A load balancer, also known as an application delivery controller (ADC), is a physical or virtual network device, software, or cloud service that distributes a large volume of incoming traffic from users and applications across a pool of application servers and services. The objectives of a load balancer are to provide high availability by using alternate application resources when the initial one becomes unavailable, to enable efficient use of both network and application server resources, and to reduce the time it takes to respond to user requests, lowering latency and improving performance. It acts as a traffic director sitting in front of your applications, routing client requests across all application servers capable of fulfilling them in a manner that maximizes speed and capacity utilization. This ensures that no single server is overworked, which could degrade performance. If an application server instance goes down, the load balancer redirects traffic to the remaining available application servers. When a new application server instance is added to the server group, the load balancer automatically includes it in its distribution algorithm.

The Concept of Load Balancing

Load balancing refers to efficiently distributing incoming network traffic across a group of application servers, also known as a server farm or server pool. This reduces the strain on each application server instance, making the servers more efficient, improving their performance, and reducing the time taken to respond to users. Load balancing is essential for most web-facing applications to function properly. By distributing user requests among multiple servers, it significantly reduces user wait time, resulting in a better user experience.

In cloud computing, load balancing optimizes resource utilization by distributing workloads across multiple computing resources, such as servers, virtual machines, or containers, so that no single resource is overburdened with traffic. This improves performance, availability, and scalability. Load balancing can be implemented at various levels, including the network layer, application layer, and database layer.

In a cloud-based deployment, load balancers may also improve the scalability of the applications and reduce the cost of operation by automatically bringing new application servers in and out of rotation as required by the number of user requests being served.
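The paragraph above describes bringing servers in and out of rotation as request volume changes. The sketch below shows the basic arithmetic and bookkeeping behind that idea. It is a minimal illustration, not how any particular cloud provider implements autoscaling: the function names, the per-server capacity figure, and the minimum and maximum pool sizes are all hypothetical assumptions.

```python
import math


def desired_server_count(requests_per_sec: float,
                         capacity_per_server: float,
                         min_servers: int = 2,
                         max_servers: int = 20) -> int:
    """Return how many servers should be in rotation for the current request rate.

    Hypothetical policy: capacity_per_server is an assumed, pre-measured figure.
    """
    if capacity_per_server <= 0:
        raise ValueError("capacity_per_server must be positive")
    needed = math.ceil(requests_per_sec / capacity_per_server)
    # Keep a floor for redundancy and a ceiling for cost control.
    return max(min_servers, min(max_servers, needed))


def adjust_rotation(active: list[str], standby: list[str],
                    target: int) -> tuple[list[str], list[str]]:
    """Move servers between standby and the active rotation until len(active)
    equals target (or the standby pool runs out)."""
    active, standby = list(active), list(standby)
    while len(active) < target and standby:
        active.append(standby.pop(0))    # bring a standby server into rotation
    while len(active) > target:
        standby.insert(0, active.pop())  # take the most recently added server out
    return active, standby


# 1,200 req/s at an assumed 250 req/s per server needs ceil(4.8) = 5 servers.
target = desired_server_count(1200, 250)
active, standby = adjust_rotation(["app-1", "app-2"],
                                  ["app-3", "app-4", "app-5", "app-6"], target)
print(target, active)
```

The floor of two servers keeps some redundancy even at very low traffic, and the ceiling caps cost during spikes; real deployments typically also add cooldown periods so the pool does not oscillate.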

Load balancers are key to cybersecurity, providing defense against malicious attacks on applications and networks. They protect against SYN floods, application- and network-level DDoS attacks, and attacks on web-facing applications. If the load balancer detects malicious attempts to flood applications with spurious requests or to consume connection and CPU resources, or if a server becomes vulnerable due to the volume of malicious requests, DDoS traffic may be rerouted to a dedicated DDoS protection appliance or service.

Many load balancers also come integrated with web application firewall (WAF) and web application and API protection (WAAP) solutions, and can inspect users’ requests to detect attempts to send malicious data to applications.

Load balancers help eliminate single points of failure, reduce the application attack surface, and prevent resource exhaustion and link saturation.

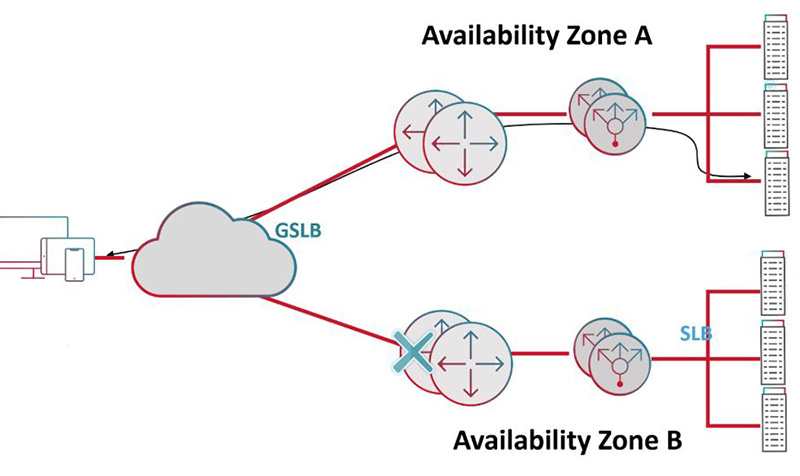

In application delivery, load balancers act as the single point of contact for users and clients, distributing requests across multiple targets, such as AWS EC2 or Azure VM application instances, in different availability zones and regions. This capability, usually referred to as server load balancing (SLB) and global server load balancing (GSLB), enhances application availability and fault tolerance and helps with disaster recovery.

Traffic distribution is managed by adding “listeners” to the load balancer. Each listener is configured with a specific protocol and port and forwards requests to a target group of registered applications. When a request satisfies one of a listener’s rules, the traffic is forwarded to the target group associated with that rule. You can create multiple target groups for different request types and multiple rules that match the content of user requests to those target groups.
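To make the listener, rule, and target-group relationship concrete, here is a minimal sketch in which a listener evaluates its rules in order and falls back to a default target group. The class names, the first-match-wins ordering, the default-group fallback, and the example host names and paths are illustrative assumptions, not any specific vendor's configuration model.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Request:
    host: str
    path: str
    headers: dict[str, str] = field(default_factory=dict)


@dataclass
class Rule:
    # A predicate on the request plus the target group it forwards to.
    matches: Callable[[Request], bool]
    target_group: str


@dataclass
class Listener:
    protocol: str
    port: int
    rules: list[Rule]
    default_target_group: str

    def route(self, request: Request) -> str:
        # Evaluate rules in order; the first satisfied rule wins.
        for rule in self.rules:
            if rule.matches(request):
                return rule.target_group
        # No rule matched: use the listener's default target group.
        return self.default_target_group


# Hypothetical HTTPS listener with two content rules.
listener = Listener(
    protocol="HTTPS",
    port=443,
    rules=[
        Rule(lambda r: r.path.startswith("/api/"), "api-servers"),
        Rule(lambda r: r.host == "images.example.com", "image-servers"),
    ],
    default_target_group="web-servers",
)

print(listener.route(Request(host="www.example.com", path="/api/orders")))
```

Keeping rules ordered and explicit makes routing predictable: a request that matches several rules always goes to the group named by the earliest one.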

The load balancer continuously monitors the health of all registered application servers in each enabled availability zone and routes requests only to healthy targets. Before using your load balancer, you must add at least one listener. The rules you define for your listeners determine how the load balancer routes requests to registered targets, such as Google Compute Engine, Microsoft Azure VM, or Amazon EC2 instances.

Listeners support various protocols and ports. An HTTPS listener can offload TLS/SSL encryption and decryption to your load balancer, allowing your application servers to focus on their business logic. If the listener protocol is HTTPS, you must deploy at least one SSL server certificate on the listener. Listeners enhance application performance, reliability, and availability by checking for client connection requests and distributing these requests to various targets.

Load balancing is also important for availability: the load balancer performs periodic health checks on the host machines to confirm that they can handle client requests. If one of the host machines is down, the load balancer redirects client requests to the other available hosts and removes the faulty server from the pool until the issue is resolved.
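The health-check behavior described above can be sketched as a pool that removes a server after several consecutive failed probes and restores it after several consecutive successes. This is a minimal illustration under assumptions: the `probe` callable stands in for a real check (for example, an HTTP request to a hypothetical `/health` endpoint), and the two-failure and two-success thresholds are illustrative defaults.

```python
from typing import Callable


class ServerPool:
    """Tracks which registered servers are healthy enough to receive traffic."""

    def __init__(self, servers: list[str],
                 unhealthy_threshold: int = 2,
                 healthy_threshold: int = 2) -> None:
        self.servers = list(servers)
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self.in_service = set(servers)             # all servers start in service
        self._fails = {s: 0 for s in servers}      # consecutive failed probes
        self._passes = {s: 0 for s in servers}     # consecutive successful probes

    def run_health_checks(self, probe: Callable[[str], bool]) -> None:
        """Probe every server once and update its in-service status."""
        for server in self.servers:
            if probe(server):
                self._passes[server] += 1
                self._fails[server] = 0
                if self._passes[server] >= self.healthy_threshold:
                    self.in_service.add(server)      # restore once consistently healthy
            else:
                self._fails[server] += 1
                self._passes[server] = 0
                if self._fails[server] >= self.unhealthy_threshold:
                    self.in_service.discard(server)  # stop sending it new requests

    def healthy_servers(self) -> list[str]:
        return [s for s in self.servers if s in self.in_service]


# Simulated probe: servers listed in `down` fail their health check.
down = {"app-2"}
pool = ServerPool(["app-1", "app-2", "app-3"])
pool.run_health_checks(lambda s: s not in down)
pool.run_health_checks(lambda s: s not in down)
print(pool.healthy_servers())
```

Requiring consecutive failures before removal avoids evicting a server over a single dropped probe, and requiring consecutive successes avoids sending traffic back to a server that is still flapping.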

The benefits of load balancing include reduced downtime, scalability, redundancy, flexibility, and efficiency of applications. It helps to distribute workloads across multiple application resources, which reduces the load on individual application resources and improves the overall performance of end-to-end traffic. Load balancing ensures that there is no single point of failure in the system, thus providing high availability and fault tolerance to handle application server failures. It also makes it easier to scale resources up or down as needed, which helps to handle spikes in user traffic or changes in demand. Furthermore, load balancing ensures that resources are used efficiently, which reduces wastage and helps to optimize costs.

Load balancing can be implemented using one or more of the following approaches:

Software Load Balancing:

Software load balancers, also called virtual load balancers, offer the same functionality as hardware load balancers but do not require a dedicated physical device. Software load balancers are applications installed on servers or delivered as a native and/or managed cloud service. They route network traffic to application servers by examining request attributes, such as the client IP address and HTTP headers, and by applying listener rules that match the contents of each request.

Hardware Load Balancing:

The primary goal of hardware load balancing is high performance. Hardware load balancers are physical devices that distribute network traffic across multiple application servers. They use dedicated CPUs, specialized TLS/SSL encryption and decryption hardware, and specialized device drivers to speed up request and response processing.

Network vs. Application Load Balancing:

Network Load Balancers (NLBs) operate at the transport layer (Layer 4 of the OSI model) and are designed for high performance, handling millions of requests per second with very low latencies. They are best suited for load balancing of TCP traffic.

Application Load Balancers (ALBs), on the other hand, operate at the application layer (Layer 7 of the OSI model). They are intended for load balancing HTTP and HTTPS traffic and support advanced routing rules, including content-based routing on request attributes such as the URL path, host name, or HTTP headers.
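The practical difference between the two layers is what information the load balancer can use to pick a target. The sketch below contrasts them under stated assumptions: the Layer 4 function sees only connection metadata (the 5-tuple) and picks a server with a flow hash, which is one common approach, while the Layer 7 function parses the HTTP request line and routes on the URL path. Function names, addresses, and route prefixes are hypothetical.

```python
import hashlib


def l4_select(src_ip: str, src_port: int, dst_ip: str, dst_port: int,
              protocol: str, servers: list[str]) -> str:
    """Layer 4: only the connection 5-tuple is visible; the payload is not parsed."""
    key = f"{src_ip}:{src_port}-{dst_ip}:{dst_port}/{protocol}".encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    # Every packet of the same flow hashes to the same server.
    return servers[digest % len(servers)]


def l7_select(raw_request: bytes, routes: list[tuple[str, str]],
              default: str) -> str:
    """Layer 7: parse the HTTP request line and route on the URL path."""
    request_line = raw_request.split(b"\r\n", 1)[0].decode("ascii")
    _method, path, _version = request_line.split(" ", 2)
    for prefix, target_group in routes:
        if path.startswith(prefix):
            return target_group
    return default


servers = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
print(l4_select("198.51.100.7", 51514, "203.0.113.10", 443, "tcp", servers))

request = b"GET /video/intro.mp4 HTTP/1.1\r\nHost: example.com\r\n\r\n"
routes = [("/video/", "media-servers"), ("/api/", "api-servers")]
print(l7_select(request, routes, "web-servers"))
```

The Layer 4 path does far less work per packet, which is why it suits very high-volume TCP traffic; the Layer 7 path must buffer and parse the request but can make content-aware decisions.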

Use Cases for Network-Level Load Balancing:

Network-level load balancing is particularly useful when you need to support a high volume of inbound TCP requests with very low latency and the routing decision does not depend on the content of each request.

Use Cases for Application-Level Load Balancing:

Application-level load balancing is beneficial when you need to distribute incoming application traffic across multiple application servers, such as AWS EC2 or Azure VM application instances in multiple availability zones and regions, which increases the availability of your application and improves disaster resilience.

The main types of load balancing algorithms are:

Round-Robin Technique:

The Round-Robin technique is one of the simplest methods for distributing client requests across a group of servers. Going down the list of servers in the group, the load balancer forwards a client request to each server in turn. When it reaches the end of the list, the load balancer loops back and goes down the list again. The main benefit of round-robin load balancing is that it is extremely simple to implement.
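A minimal round-robin selector, assuming a fixed server list supplied at construction time (the class name and server names are hypothetical), shows how little logic the technique needs:

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in list order, looping back to the start at the end."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the list indefinitely: a, b, c, a, b, c, ...
        self._servers = cycle(servers)

    def next_server(self) -> str:
        return next(self._servers)


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([rr.next_server() for _ in range(5)])
```

Because `cycle` takes a snapshot of the list, adding or removing servers in this sketch means building a new balancer; a production implementation would track an index into a mutable, health-checked pool instead.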

Weighted Round-Robin:

Weighted Round-Robin is an advanced load balancing configuration. Like basic Round-Robin, it cycles through a list of servers (or, in DNS-based load balancing, through multiple IP address records), but it adds the flexibility of assigning each server a weight based on its capacity or the needs of your domain. Servers assigned a higher weight receive a higher percentage of the incoming requests.
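One well-known way to implement weighted round-robin is the "smooth" variant used, for example, by NGINX. It interleaves servers instead of sending long bursts to the heaviest one. The sketch below is a minimal version of that technique; the class name and example weights are assumptions for illustration.

```python
class SmoothWeightedRoundRobin:
    """Smooth weighted round-robin: each server receives a share of requests
    proportional to its weight, spread evenly over each cycle."""

    def __init__(self, weights: dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self._weights = dict(weights)
        self._current = {server: 0 for server in weights}
        self._total = sum(weights.values())

    def next_server(self) -> str:
        # 1. Every server's running score grows by its weight.
        for server, weight in self._weights.items():
            self._current[server] += weight
        # 2. The server with the highest score is chosen (ties go to the first).
        chosen = max(self._current, key=self._current.get)
        # 3. The chosen server is penalized by the total weight.
        self._current[chosen] -= self._total
        return chosen


# app-1 has five times the capacity of app-2 and app-3.
wrr = SmoothWeightedRoundRobin({"app-1": 5, "app-2": 1, "app-3": 1})
print([wrr.next_server() for _ in range(7)])
```

Over every seven requests, app-1 receives five and the others one each, but app-2 and app-3 are slotted between app-1's turns rather than at the end of a burst.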

IP Hash:

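IP Hash is a load balancing technique that uses a hashing algorithm to select a server. When a request arrives, the load balancer computes a hash of the client’s source IP address (and, in some implementations, the destination IP address as well) and uses the result to choose a server from the pool. Because the same IP address always produces the same hash, requests from a given client are consistently sent to the same server as long as the server pool does not change. This provides session persistence while spreading clients roughly evenly across the servers.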
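A minimal sketch of source-IP hashing follows. It assumes SHA-256 over the packed address bytes and a simple modulo over the server list; real products choose their own hash functions and inputs, and the function name here is hypothetical.

```python
import hashlib
import ipaddress


def ip_hash_select(client_ip: str, servers: list[str]) -> str:
    """Map a client IP address to a server; the same IP always maps to the same server."""
    if not servers:
        raise ValueError("at least one server is required")
    # ip_address() validates the input and handles both IPv4 and IPv6.
    packed = ipaddress.ip_address(client_ip).packed
    digest = hashlib.sha256(packed).digest()
    index = int.from_bytes(digest[:4], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
print(ip_hash_select("198.51.100.7", servers))
print(ip_hash_select("2001:db8::1", servers))
```

With a plain modulo, adding or removing a server changes the mapping for most clients and breaks their persistence; consistent hashing is the usual remedy when the pool changes often.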

Least Connections Method:

The Least Connections method is a smart way to balance the workload among servers by directing each new user request to the server with the least number of active connections. This dynamic adjustment ensures efficient resource utilization and contributes to the overall performance and reliability of the network.
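The Least Connections method can be sketched as a counter per server: pick the server with the fewest active connections, increment its count when a connection opens, and decrement it when the connection closes. The class and method names below are hypothetical.

```python
class LeastConnectionsBalancer:
    """Sends each new connection to the server with the fewest active connections."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # Ties are broken by list order, because min() returns the first minimum.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when a connection to `server` closes.
        if self.active[server] <= 0:
            raise ValueError(f"{server} has no active connections")
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
first_three = [lb.acquire() for _ in range(3)]
lb.release("app-2")          # a long request on app-2 finishes
print(first_three, lb.acquire())
```

Unlike round-robin, this adapts to uneven request durations: a server stuck with slow requests keeps a high count and stops receiving new work until it catches up.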

Adaptive Algorithms for Enhanced Performance:

Adaptive load balancing makes decisions based on status indicators retrieved by the load balancer from the application servers. The status is determined by an agent running on each server. The load balancer queries each server regularly for this status information and then sets a dynamic weight for each server accordingly.
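The step that turns agent-reported status into dynamic weights might look like the sketch below. It assumes, hypothetically, that each agent reports CPU utilization between 0.0 and 1.0 and that weight is proportional to remaining headroom; real agents may report other metrics (response time, memory, queue depth), and the formula is an illustrative choice, not a standard.

```python
from dataclasses import dataclass


@dataclass
class AgentStatus:
    # Hypothetical metric reported by the per-server agent:
    # 0.0 means idle, 1.0 means fully saturated.
    cpu_utilization: float


def dynamic_weights(statuses: dict[str, AgentStatus],
                    base_weight: int = 100,
                    min_weight: int = 1) -> dict[str, int]:
    """Convert the latest agent reports into per-server weights."""
    weights = {}
    for server, status in statuses.items():
        headroom = max(0.0, 1.0 - status.cpu_utilization)
        # A saturated server keeps a minimal weight instead of dropping to zero;
        # removing it entirely is left to health checks.
        weights[server] = max(min_weight, round(base_weight * headroom))
    return weights


latest = {
    "app-1": AgentStatus(cpu_utilization=0.2),
    "app-2": AgentStatus(cpu_utilization=0.9),
    "app-3": AgentStatus(cpu_utilization=1.0),
}
print(dynamic_weights(latest))
```

On each polling interval, the resulting weights could be handed to a weighted round-robin scheduler, so lightly loaded servers automatically receive a larger share of new requests.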

The benefits of load balancing are numerous and include improved performance, scalability, and reliability:

Scalability:

Load balancing makes it easier to scale application resources up or down as needed to handle spikes in traffic or changes in demand. This saves cost, especially in cloud deployments where licensing is pay-as-you-go (PAYG).

Improved Performance:

Load balancing helps to distribute the workload across multiple resources, which reduces the load on each application resource and improves the overall performance of the system.

Application Delivery:

In the context of application delivery, a load balancer serves as the single point of contact for clients. It distributes incoming application traffic across multiple application instances, deployed as software, hardware or cloud service instances, in multiple “availability zones” and regions to increase the availability of your application.

Cybersecurity:

In terms of cybersecurity, load balancers offer an extra layer of protection against network and application DDoS attacks and protect applications from malicious requests. Load balancers can help remove single points of failure, minimize the attack surface, and make it more difficult to exhaust resources and saturate links.

Reliability:

Load balancing ensures that there is no single point of failure in the system, which provides high availability and fault tolerance to handle application server failures.

Load balancing, while essential for distributing network traffic and ensuring optimal performance, can introduce several availability and security challenges, including:

Single Point of Failure:

While load balancing helps to reduce the risk of a single point of failure, the load balancer itself can become a single point of failure if not implemented correctly. To prevent this, load balancers should be deployed in an active-passive, active-active, or clustered configuration. Many virtualization and cloud platforms also support deploying load balancer instances from templates or scaling groups, such as Azure Virtual Machine Scale Sets, to provide high availability and scalability for network and application load balancers.
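The core of an active-passive configuration is a failover decision: the standby unit watches heartbeats from the active unit and takes over after several are missed in a row. The sketch below models only that decision logic, under assumptions: the class name, unit names, and three-missed-heartbeats threshold are hypothetical, and real deployments typically rely on a redundancy protocol such as VRRP together with state synchronization.

```python
class ActivePassivePair:
    """Promotes the standby load balancer after consecutive missed heartbeats."""

    def __init__(self, primary: str, secondary: str,
                 failure_threshold: int = 3) -> None:
        self.active = primary
        self.standby = secondary
        self.failure_threshold = failure_threshold
        self._missed = 0

    def on_heartbeat_interval(self, heartbeat_received: bool) -> str:
        """Process one heartbeat interval and return the unit that is now active."""
        if heartbeat_received:
            self._missed = 0
        else:
            self._missed += 1
            if self._missed >= self.failure_threshold:
                # Fail over: the standby becomes active and the failed unit
                # becomes the standby once it is repaired.
                self.active, self.standby = self.standby, self.active
                self._missed = 0
        return self.active


pair = ActivePassivePair("lb-1", "lb-2")
timeline = [True, False, False, False, True]
print([pair.on_heartbeat_interval(hb) for hb in timeline])
```

Waiting for several missed heartbeats, rather than one, trades a slightly slower failover for protection against needless switchovers caused by a single lost packet.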

Security Risks:

If not implemented correctly, load balancing can introduce security risks such as allowing unauthorized access or exposing sensitive data. To protect against unauthorized access, default passwords for administration must be changed, regular patches should be applied, and administrator privileges must be strictly controlled. Many load balancers provide separate user, administrator, and viewer roles to further enhance security and protect against excessive permissions.

Vulnerabilities:

Vulnerabilities can exist in the load balancer itself, in its configuration, or in how it is used. Enforce regular patching and track Common Vulnerabilities and Exposures (CVE) announcements that affect your load balancer.

For web-facing applications, load balancing is often employed to distribute user and application requests among several application servers. This reduces the strain on each application server and makes them more efficient, speeding up performance and reducing latency for the user. Because requests are spread efficiently, a large volume of user requests can be served by fewer application server instances: user wait time is cut down and server resources are used more efficiently, resulting in a better user experience and a lower cost of application delivery.

Load balancing helps to improve the overall performance and reliability of applications by ensuring that resources are used efficiently and that there is no single point of failure. It also helps to scale applications on demand and provides high availability and fault tolerance to handle traffic spikes and application server failures.

Load balancers improve application performance by directing requests to servers based on a chosen distribution algorithm, reducing response times and improving user experience. They make it possible to process more user requests with fewer application servers, prevent single points of failure, and ensure optimal server utilization, leading to cost savings.

By routing requests to available application servers, load balancing takes the pressure off stressed servers and ensures optimal processing, high availability, and reliability. Load balancers dynamically add or drop servers as demand rises or falls. In this way, load balancing provides flexibility in adjusting to demand and reduces the cost of operation.

Radware’s Load Balancers for Application Delivery

Radware provides advanced and comprehensive load balancing solutions and application delivery capabilities to ensure optimal service levels for applications in virtual, cloud, and software-defined data centers, to cater to various business needs:

- Alteon offers completely isolated, PCI-compliant environments to enable consolidation of multiple load balancers, even on the entry-level platform. Alteon provides the same functionality, user interface, and codebase regardless of the form factor, whether physical, virtual, or in the cloud.

- Alteon is an application delivery controller (ADC) solution that provides local and global server load balancing for all web, cloud, and mobile applications.

- With Global Elastic Licensing (GEL), Radware provides complete flexibility and cost control as you move from physical appliance to virtual or cloud. GEL allows you to reclaim the capacity from any form factor no longer in use, covers virtual, physical and cloud (AWS and Azure) and can be increased or decreased in 1GB increments. The reclaimed capacity may be redeployed in another environment. This capability enables our customers to transition to cloud from physical or virtual deployment without risk.

- Alteon provides unified security with an integrated Web Application Firewall (WAF) and Web Application and API Protection (WAAP), Defense Messaging, Authentication Gateway, and inbound and outbound SSL inspection. For API protection, Radware WAF also integrates with Radware Bot Manager (available as a SaaS application) and our attack intelligence feed.

- To extend and customize Alteon capabilities, AppShape++, a TCL-based scripting capability, may be deployed. Unlike our competition, Radware adds reusable TCL scripts as built-in Alteon functionality in major releases, which makes them easily supportable across version upgrades, maintainable, and faster than interpreted scripts.

- Radware’s integrated WAF solution, available on all Alteon platforms, can be deployed in a unique out-of-path mode and provides an adaptive policy generation engine that offers greater coverage and lower false positive rates than competing solutions.

- Alteon provides automation out-of-the-box to streamline the entire lifecycle of the application delivery with no integration efforts required. Alteon also integrates with many leading orchestration systems including VMware VRO, Cisco ACI, OpenStack Heat, HP Network Automation, Ansible, and many others.

These capabilities leverage various load-balancing algorithms to efficiently distribute network traffic and optimize application performance. Radware's load balancers are designed for the network operator (both ADC experts and non-experts) for automation of various operational tasks, significantly reducing the operational workload throughout the ADC services’ lifecycle.

Choosing the right load balancer for your organization is a critical decision that can significantly impact its performance and security. The main factors to consider when choosing a load balancer are:

Evaluate criticality and security requirements: Consider the importance of the applications you're balancing and the level of security they require.

Seek flexibility and scalability: Look for solutions that can easily scale up or down based on your changing needs.

Identify immediate and long-term needs: Understand your current requirements and anticipate future needs.

Compare price points and features: Perform a cost analysis considering both capital expenditures (CapEx) and operating expenses (OpEx).

Assess traffic type and volume: Evaluate the type and volume of traffic your business handles.