Server Load Balancing Auto-Scale

The difference between planned scaling and dynamic scaling

We all know that an Application Delivery Controller (ADC) facilitates scale, availability and value-added services across the delivery chain. Moreover, ADC 101 for capacity planning is: plan for your maximum, and then some. But as digital transformation spreads rapidly across digital assets and interactions, we are starting to see a new kind of scale requirement: dynamic scale.

Consider the following use cases:

News Web Application – You host a news web application on a cloud platform. A high-profile, unexpected news event breaks, and suddenly the whole world is trying to reach your site to stream and read that content, causing a dramatic increase in traffic.

E-commerce Website – A leading fashion blogger promotes an item listed on your website, and suddenly thousands of sessions are knocking on your site, all trying to get the piece of fashion you are selling.

So, what are your planning options?

You could keep the maximum planned number of ADC instances running at all times to serve such a surge. If your resources cost nothing, that is a valid option.

Alternatively, you could define these highly spiky applications as “dynamic”: rather than pre-allocating capacity for the maximum, you allocate for average, normal traffic and let capacity grow on demand.

In Alteon ADC we call this Auto-Scaling: load balancer auto-scaling adds load balancer instances, scaling out when the website experiences high, unplanned traffic so that it can handle the load dynamically.

What is SLB auto-scale?

Server load balancer auto-scaling is a capability that allows you to dynamically adjust the capacity or number of your load balancers in response to changes in traffic or demand. It is a crucial component of cloud-based infrastructure and is often used in conjunction with auto-scaling of application servers. Here’s how load balancer auto-scaling typically works:

Scaling Policies: You define scaling policies based on the metrics and thresholds that indicate the need to scale the load balancer. These policies determine when and how to scale the load balancer. Common metrics for load balancer scaling include:

- Request rate: Scaling out when the request rate exceeds a certain threshold.

- Connection count: Scaling out when the number of concurrent connections surpasses a specified limit.

- Response times: Scaling out when response time or latency exceeds a target value.
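To make the policy idea concrete, here is a minimal Python sketch that checks the three metrics above against thresholds. The class names, field names and threshold values are hypothetical illustrations, not an Alteon or cloud-provider API; we also assume response time is measured as a 95th-percentile latency and that breaching any single metric is enough to request a scale-out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Metrics:
    requests_per_sec: float
    concurrent_connections: int
    p95_latency_ms: float  # assumed: 95th-percentile response time


@dataclass
class ScalingPolicy:
    # Hypothetical thresholds; tune them to your own traffic profile.
    max_requests_per_sec: float
    max_connections: int
    max_latency_ms: float

    def breaches(self, m: Metrics) -> List[str]:
        """Names of the metrics currently above their thresholds."""
        breached = []
        if m.requests_per_sec > self.max_requests_per_sec:
            breached.append("request_rate")
        if m.concurrent_connections > self.max_connections:
            breached.append("connection_count")
        if m.p95_latency_ms > self.max_latency_ms:
            breached.append("response_time")
        return breached

    def should_scale_out(self, m: Metrics) -> bool:
        # Any single breached metric is enough to request more capacity.
        return bool(self.breaches(m))


policy = ScalingPolicy(max_requests_per_sec=5000, max_connections=20000, max_latency_ms=250)
print(policy.breaches(Metrics(6200, 12000, 180)))  # ['request_rate']
```

Returning the list of breached metrics, rather than a bare yes/no, makes it easy to log or alert on which condition triggered the scaling event.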

Scaling Actions:

- Scale Out: When traffic increases beyond the defined threshold, the auto-scaling system triggers the provisioning of additional load balancer instances or resources. This is known as “scaling out.”

- Scale In: When traffic drops and the load on the load balancers falls below a certain threshold, the system can remove unneeded load balancer instances to save on costs. This is called “scaling in.”
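Scale-out and scale-in actions are usually guarded by two safeguards: a gap between the two thresholds (hysteresis), so the cluster does not flap when load hovers near one value, and a cooldown, so a new instance has time to absorb traffic before the next decision. The sketch below is a hypothetical controller illustrating those ideas; the thresholds, instance bounds and cooldown are assumed values, and `load` is assumed to be average utilization across instances between 0.0 and 1.0.

```python
import time
from typing import Optional


class Autoscaler:
    """Threshold-based scale-out/scale-in controller (hypothetical sketch)."""

    def __init__(self, min_instances: int = 2, max_instances: int = 10,
                 scale_out_at: float = 0.75, scale_in_at: float = 0.30,
                 cooldown_s: float = 300.0):
        # The gap between the two thresholds (hysteresis) prevents flapping.
        if not scale_in_at < scale_out_at:
            raise ValueError("scale_in_at must be below scale_out_at")
        self.min_instances = min_instances
        self.max_instances = max_instances
        self.scale_out_at = scale_out_at
        self.scale_in_at = scale_in_at
        self.cooldown_s = cooldown_s
        self.instances = min_instances
        self._last_action = float("-inf")

    def evaluate(self, load: float, now: Optional[float] = None) -> str:
        """Decide on one action for the current average load (0.0 to 1.0)."""
        now = time.monotonic() if now is None else now
        # Let the previous action take effect before acting again.
        if now - self._last_action < self.cooldown_s:
            return "cooldown"
        if load > self.scale_out_at and self.instances < self.max_instances:
            self.instances += 1
            self._last_action = now
            return "scale_out"
        if load < self.scale_in_at and self.instances > self.min_instances:
            self.instances -= 1
            self._last_action = now
            return "scale_in"
        return "hold"
```

For example, `Autoscaler().evaluate(0.9, now=0)` adds an instance, while a second high reading on the same object 100 seconds later returns `"cooldown"` instead of scaling again. The minimum and maximum bounds keep a baseline for availability and cap spending.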

High Availability: Ensuring high availability is critical. Auto-scaling may also involve adding redundant load balancer instances in different availability zones or regions to minimize downtime.
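One simple way to spread redundant instances is to place each new instance in the zone that currently hosts the fewest. The sketch below assumes hypothetical zone names and a list recording where existing instances run; real placement would go through your cloud or virtualization platform's own APIs.

```python
from collections import Counter
from typing import List


def pick_zone(zones: List[str], placements: List[str]) -> str:
    """Return the zone with the fewest instances; ties go to the earliest zone listed."""
    counts = Counter(placements)  # zones with no instances count as 0
    return min(zones, key=lambda z: counts[z])


zones = ["zone-a", "zone-b", "zone-c"]  # hypothetical zone names
placements: List[str] = []
for _ in range(5):
    placements.append(pick_zone(zones, placements))
print(placements)  # ['zone-a', 'zone-b', 'zone-c', 'zone-a', 'zone-b']
```

With five instances across three zones, losing any single zone takes out at most two of them.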

Load Distribution: When scaling out, the new load balancer instances are added to the load balancing pool, distributing traffic evenly across all active load balancers.
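As a rough illustration of even distribution, the following sketch rotates requests round-robin across whichever load balancer instances are currently active, so a newly added instance starts receiving its share right away. It assumes some front-end tier (for example DNS or an upstream distributor) performs this selection; the class and instance names are hypothetical.

```python
import itertools


class LoadBalancerPool:
    """Round-robin selection across active load balancer instances (hypothetical)."""

    def __init__(self, members):
        self.members = list(members)
        self._counter = itertools.count()

    def add(self, member):
        # A newly scaled-out instance joins the rotation immediately.
        self.members.append(member)

    def remove(self, member):
        # A scaled-in instance stops receiving new requests.
        self.members.remove(member)

    def next_member(self):
        if not self.members:
            raise RuntimeError("no active load balancer instances")
        return self.members[next(self._counter) % len(self.members)]


pool = LoadBalancerPool(["lb-1", "lb-2"])
pool.add("lb-3")  # scale out
picks = [pool.next_member() for _ in range(6)]
```

Round-robin is only the simplest choice; weighted or least-connections selection would account for instances of different sizes or uneven session lengths.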

Traffic Monitoring: Load balancer auto-scaling begins with continuous monitoring of incoming traffic. This involves collecting data on request rates, connection counts, and other relevant metrics.
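A monitoring loop needs a way to turn raw events into a metric that a scaling policy can compare against a threshold. The sketch below computes request rate over a sliding time window; the class name and the 60-second default window are assumptions, and in practice these counters would typically come from the load balancer's own statistics rather than per-request timestamps.

```python
from collections import deque


class RateMonitor:
    """Request rate over a sliding time window (hypothetical sketch)."""

    def __init__(self, window_s: float = 60.0):
        self.window_s = window_s
        self._events = deque()  # request timestamps in seconds, oldest first

    def record(self, ts: float) -> None:
        self._events.append(ts)
        self._evict(ts)

    def _evict(self, now: float) -> None:
        # Drop timestamps that have fallen out of the window to bound memory.
        while self._events and self._events[0] <= now - self.window_s:
            self._events.popleft()

    def rate(self, now: float) -> float:
        """Requests per second over the last window_s seconds."""
        self._evict(now)
        return len(self._events) / self.window_s


monitor = RateMonitor(window_s=10)
for t in range(20):  # one request per second for 20 seconds
    monitor.record(float(t))
print(monitor.rate(now=19.0))  # 1.0
```

A longer window smooths out momentary bursts at the cost of reacting more slowly to a genuine surge.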

Notifications and Alerts: Configure notifications and alerts to keep administrators or DevOps teams informed of scaling events or unusual conditions.

The benefits of SLB auto-scale:

Traffic Variability: Traffic to web applications and services can vary significantly throughout the day and across different days. During peak hours or unexpected traffic spikes, the demand for resources can increase dramatically. Load balancing and auto-scaling help handle this variability efficiently.

High Availability: To ensure that services remain available and responsive even when individual servers or components fail, load balancers distribute traffic to healthy instances. Auto-scaling ensures that additional instances are provisioned automatically in case of failures or increased demand.

Cost Efficiency: By scaling resources up or down based on demand, you can reduce operational costs. Auto-scaling helps ensure that you pay only for the resources you need, which is particularly important in cloud computing, where resources are often billed by the hour or by the minute.
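A quick back-of-the-envelope calculation shows where the savings come from under hourly billing. Every number below (the demand profile and the price per instance-hour) is hypothetical, and the dynamic case assumes capacity tracks demand perfectly, which real auto-scaling only approximates because new instances take time to start.

```python
# Hypothetical 24-hour demand profile, in ADC instances needed per hour:
# 2 instances cover normal traffic, and a 3-hour spike needs 8.
hourly_demand = [2] * 21 + [8] * 3

PRICE_PER_INSTANCE_HOUR = 0.50  # hypothetical price in USD


def instance_hours(profile):
    """Total billed instance-hours for an hourly instance-count profile."""
    return sum(profile)


static_profile = [max(hourly_demand)] * len(hourly_demand)  # provision for the peak all day
dynamic_profile = hourly_demand                              # follow demand hour by hour

static_cost = instance_hours(static_profile) * PRICE_PER_INSTANCE_HOUR
dynamic_cost = instance_hours(dynamic_profile) * PRICE_PER_INSTANCE_HOUR
savings = 1 - dynamic_cost / static_cost

print(f"static: {static_cost:.2f}, dynamic: {dynamic_cost:.2f}, savings: {savings:.1%}")
```

With this profile, provisioning for the peak all day bills 192 instance-hours, while following demand bills 66, roughly a two-thirds reduction.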

Optimizing Resource Utilization: Auto-scaling allows you to match resource capacity (e.g., CPU, memory, network) to the current demand. This prevents over-provisioning, where you have too many resources during periods of low traffic, which can lead to unnecessary costs.

Performance Optimization: Load balancers distribute traffic evenly, which can improve the performance of your application or service. Additionally, auto-scaling can add more resources when needed, further enhancing performance during traffic spikes.

Geographical Distribution: For global applications, load balancing can distribute traffic to servers in different geographic locations to reduce latency and improve user experience. Auto-scaling allows for dynamic adjustments in each region to handle local demand.

Failover and Redundancy: Load balancers can route traffic away from unhealthy servers or regions, improving system reliability. Auto-scaling ensures that replacement instances are available in case of server or instance failures.

Scalability: As your business grows, load balancer auto-scaling makes it easier to scale your infrastructure by adding more servers or instances. It simplifies the process of handling increased demand without manual intervention.

Simplified Management: Auto-scaling and load balancing reduce the administrative burden of manually provisioning and configuring servers or instances based on traffic predictions. This automation streamlines operations.

Dynamic Workloads: Many modern applications have dynamic workloads, with traffic patterns that change rapidly. Auto-scaling and load balancing are designed to handle such dynamic environments effectively.

Resilience: Auto-scaling distributes workloads across multiple instances, reducing the risk of a single point of failure. Even if one instance or server becomes overloaded or experiences issues, the system can continue to function.

Load balancer auto-scaling ensures that your applications and services remain available and responsive even during traffic spikes while also optimizing costs by automatically adjusting the load balancer capacity when traffic decreases. It’s a vital component of a scalable and highly available infrastructure in the cloud.

Radware Alteon Cluster Manager for Auto-Scale

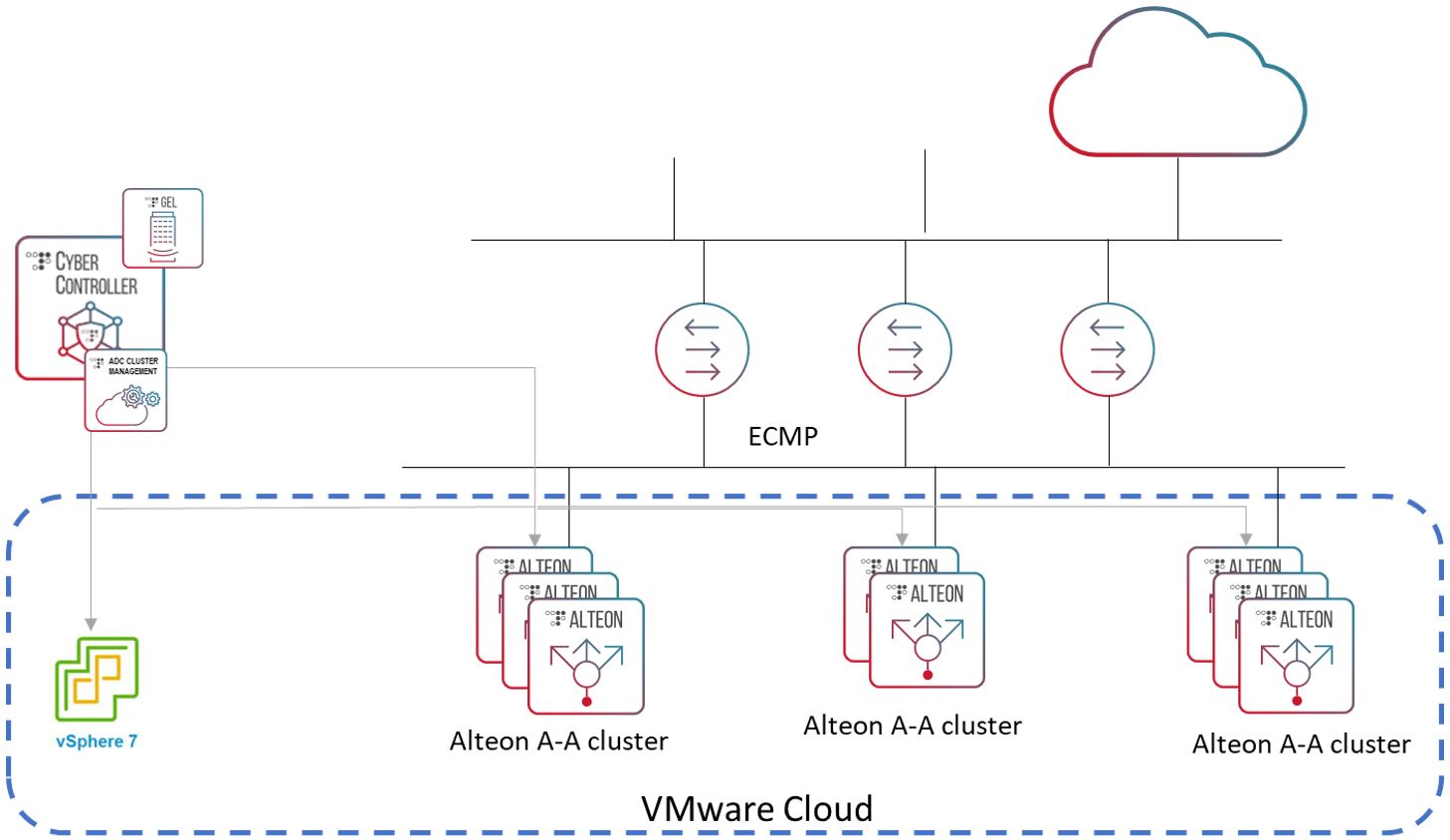

The new Alteon Cluster Management capability, available in Radware Cyber Controller starting with version 10.0.2.0, allows for provisioning, management, and maintenance of Alteon auto-scaling clusters in the VMware environment.

The Alteon auto-scaling cluster enhances the scalability and efficiency of the ADC service and handles the following types of scenarios:

Planned or unplanned traffic surge: When the ADC cluster suddenly faces a significant increase in traffic that exceeds the current cluster capacity, additional Alteon instances are temporarily provisioned to handle that increase. Once the traffic load goes back down, the additional instances are automatically decommissioned.

Gradual growth: An ADC cluster can start with a couple of Alteon devices that meet the initial traffic needs. As the application traffic increases, the scaling cluster handles it by automatically adding more Alteon instances to meet the requirement.

The Alteon Cluster Management component is included in Cyber Controller version 10.0.2.0 and performs the following functions:

Cluster scale out/in: Monitors resource utilization (CPU and throughput) on the cluster members and, based on user-specified thresholds, triggers a scale-out or scale-in event.
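The scale out/in decision described above can be sketched as follows. This is not Cyber Controller's actual logic: we assume CPU and throughput are averaged across cluster members, that exceeding either high threshold triggers a scale-out, and that scale-in happens only when both metrics are below their low thresholds. All names and threshold values are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class MemberStats:
    cpu_pct: float          # CPU utilization, 0-100
    throughput_mbps: float  # current throughput


@dataclass
class ClusterThresholds:
    # Hypothetical user-specified thresholds.
    cpu_high: float = 80.0
    cpu_low: float = 30.0
    tput_high_mbps: float = 800.0
    tput_low_mbps: float = 200.0


def cluster_event(members: List[MemberStats], t: ClusterThresholds) -> Optional[str]:
    """Return "scale_out", "scale_in", or None based on cluster-wide averages."""
    if not members:
        raise ValueError("cluster has no members")
    avg_cpu = mean(m.cpu_pct for m in members)
    avg_tput = mean(m.throughput_mbps for m in members)
    if avg_cpu > t.cpu_high or avg_tput > t.tput_high_mbps:
        return "scale_out"
    if avg_cpu < t.cpu_low and avg_tput < t.tput_low_mbps:
        return "scale_in"
    return None
```

Requiring both metrics to be low before scaling in is a conservative choice: it avoids removing an instance that is lightly loaded on CPU but still carrying heavy throughput.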

Cluster provisioning: Provisions the Alteon instances required for the cluster in the VMware cloud.

Deploying and updating ADC Services: All Alteon configuration, at both the ADC service level and the device level, must be performed only via Cyber Controller, to ensure that the configuration is identical on all cluster members.

By combining Radware’s Alteon Cluster Manager with a global elastic licensing (GEL) model, organizations can purchase a single license of ADC capacity and dynamically break it down into as many ADC instances as required: allocate capacity per instance as needed, scale that capacity up or down, and even decommission ADC instances and use their capacity in another environment.
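The pooled-capacity idea can be modeled as a simple ledger: one total, split among instances, resized, and returned to the pool when an instance is decommissioned. The sketch below is a hypothetical illustration that assumes capacity is measured in Mbps of throughput; it is not Radware's licensing API.

```python
class CapacityPool:
    """One pooled capacity split across ADC instances (hypothetical sketch)."""

    def __init__(self, total_mbps: int):
        self.total_mbps = total_mbps
        self.allocations = {}  # instance name -> allocated Mbps

    @property
    def free_mbps(self) -> int:
        return self.total_mbps - sum(self.allocations.values())

    def allocate(self, instance: str, mbps: int) -> None:
        """Assign or resize an instance's share; fails if the pool is exhausted."""
        if mbps < 0:
            raise ValueError("allocation must be non-negative")
        current = self.allocations.get(instance, 0)
        if mbps - current > self.free_mbps:
            raise ValueError(f"only {self.free_mbps} Mbps free in the pool")
        self.allocations[instance] = mbps

    def release(self, instance: str) -> int:
        """Decommission an instance and return its capacity to the pool."""
        return self.allocations.pop(instance, 0)


pool = CapacityPool(total_mbps=3000)
pool.allocate("alteon-1", 1000)
pool.allocate("alteon-2", 1000)
pool.allocate("alteon-3", 1000)  # extra instance for a surge; pool now full
pool.release("alteon-3")         # surge over; 1000 Mbps back in the pool
pool.allocate("alteon-1", 2000)  # scale up an existing instance instead
```

Resizing checks only the difference between the new and current allocation, so an instance can grow into freed capacity without first being released.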

For more information

If you are wondering how to deploy Cluster Manager for auto-scale with Alteon ADC and benefit from the full Alteon feature set, go here for more information, or feel free to contact one of our ADC professionals here.