As a Solution Architect at Radware, I’ve frequently been involved in designing networking solutions tailored for demanding applications.

We’ve seen growing demand for high-performance Kubernetes networking solutions from a variety of sectors.

For example:

- A government agency with critical low-latency performance requirements for sensitive workloads.

- A large financial institution running latency-sensitive trading applications that must meet strict SLA targets.

- A telecom service provider building a 5G core infrastructure that requires near-line-rate packet processing and network isolation at scale.

These kinds of environments demand more than standard CNI plugins can provide. To meet their performance and determinism needs, we’ve leveraged advanced networking techniques such as SR-IOV and OVS-DPDK in combination with Multus CNI to build flexible, high-throughput, and low-latency architectures.

One recurring challenge has been optimizing the Alteon Kubernetes Connector (AKC)—Radware’s ingress controller—for customer environments requiring high packets per second (PPS) and low-latency performance.

In many cases, traditional container networking approaches—such as standard CNIs and Open vSwitch (OVS)—fell short of meeting these high-performance needs. To address this, we had to rethink and rearchitect the networking stack for both the AKC ingress controller and the application pods running in Kubernetes.

This blog explores that journey. We’ll dive into virtual and high-performance networking technologies like OVS, OVS-DPDK, and SR-IOV, examining how they can be leveraged to build a robust, low-latency ingress architecture.

We’ll begin with an overview of these networking technologies, weighing their pros and cons. Then, we’ll walk through a traditional ingress controller deployment and demonstrate how it can be transformed to meet the requirements of performance-critical workloads.

Virtual Networking Technologies

The table below summarizes common networking techniques used to enhance performance in virtualized and containerized environments. Each method offers a different balance between flexibility, scalability, and raw throughput.

Common Techniques in VMs

| Method | Description | Performance Use Case |
| --- | --- | --- |
| OVS (Open vSwitch) | A software switch supporting VLANs and tunnels | Standard switching, NAT |
| OVS-DPDK | Userspace OVS with Intel’s DPDK for ultra-low latency | Telco, NFV, real-time traffic |
| SR-IOV | Expose NIC’s virtual functions (VFs) to VMs for near-native speed | Scalable and performant |

Common VM Networking Options

1. Open vSwitch (OVS)

- How it works: Kernel-space switch with advanced features (VLAN, QoS, tunneling).

- Performance: Medium

- Latency: Medium

- Best for: Layer 2/3 switching, VLAN segmentation, SDN setups

- Optimizations: Multi-queue virtio-net, interrupt balancing, CPU affinity

2. OVS-DPDK

- How it works: Bypasses kernel, processes packets in user space using DPDK.

- Performance: High

- Latency: Low

- Best for: NFV, telco workloads, high-throughput VMs

- Optimizations:

  - Enable hugepages

  - CPU pinning to isolate workloads

  - Use vhost-user for VM communication

  - NUMA-aware resource allocation

3. SR-IOV

- How it works: Splits a single physical NIC into multiple Virtual Functions (VFs)

- Performance: Very High

- Latency: Very Low

- Best for: Multi-VM, high-throughput network functions

- Optimizations:

  - Bind VFs to VMs

  - CPU isolation

  - NUMA-aware tuning
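The CPU pinning step listed for OVS-DPDK comes down to telling Open vSwitch which cores its poll-mode driver (PMD) threads may use, expressed as a hexadecimal bitmask. The sketch below shows one way to derive that mask and assemble the matching `ovs-vsctl` commands. The core IDs and per-NUMA-socket memory sizes are assumptions for illustration; appropriate values depend on the host topology.

```python
def cpu_mask(cores):
    """Return a hex bitmask with one bit set per CPU core ID."""
    mask = 0
    for core in cores:
        if core < 0:
            raise ValueError("core IDs must be non-negative")
        mask |= 1 << core
    return hex(mask)


def ovs_dpdk_commands(pmd_cores, socket_mem_mb):
    """Build ovs-vsctl commands that enable DPDK and pin the PMD threads.

    pmd_cores and socket_mem_mb (MB of hugepage memory per NUMA socket)
    are illustrative inputs, not recommended values.
    """
    socket_mem = ",".join(str(mb) for mb in socket_mem_mb)
    return [
        "ovs-vsctl set Open_vSwitch . other_config:dpdk-init=true",
        f"ovs-vsctl set Open_vSwitch . other_config:dpdk-socket-mem={socket_mem}",
        f"ovs-vsctl set Open_vSwitch . other_config:pmd-cpu-mask={cpu_mask(pmd_cores)}",
    ]


if __name__ == "__main__":
    # Assumed layout: PMD threads on cores 2 and 3, 1 GB of hugepages on socket 0.
    for command in ovs_dpdk_commands(pmd_cores=[2, 3], socket_mem_mb=[1024, 0]):
        print(command)
```

The script only prints the commands so they can be reviewed before being run on a host. The cores chosen for PMD threads should also be kept away from other workloads, in line with the CPU isolation advice in the list.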

Performance Summary Table

| Method | Performance | Latency | Optimizations Needed | Use Case |
| --- | --- | --- | --- | --- |
| OVS | Medium | Medium | Medium | SDN, VLAN setups |
| OVS-DPDK | High | Low | High | Telco, NFV, low-latency VMs |
| SR-IOV | Very High | Very Low | High | Multi-tenant, fast virtual networks |

General Optimization Techniques

- CPU Pinning: Isolate VM vCPUs from noisy host processes

- Hugepages: Reduce TLB misses, improve memory throughput

- NUMA Awareness: Align NICs and vCPUs to same NUMA node

- Interrupt Affinity: Bind interrupts to specific CPUs

- Multi-queue Networking: Enable multiple virtqueues for virtio-net
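Two of these techniques, hugepages and NUMA awareness, can be verified on a Linux host before any tuning starts. The following is a minimal sketch that reads the hugepage counters from `/proc/meminfo` and the NUMA node of a NIC from sysfs. The interface name `ens1f0` and the CPU NUMA node `0` are placeholders, not values from a real deployment.

```python
from pathlib import Path


def parse_hugepages(meminfo_text):
    """Extract the HugePages_* counters and Hugepagesize (kB) from /proc/meminfo text."""
    stats = {}
    for line in meminfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.startswith("HugePages_") or key == "Hugepagesize":
            stats[key] = int(value.split()[0])
    return stats


def nic_numa_node(iface, sysfs_root="/sys/class/net"):
    """Return the NUMA node a NIC is attached to, or None if it is not reported."""
    path = Path(sysfs_root) / iface / "device" / "numa_node"
    try:
        node = int(path.read_text().strip())
    except (OSError, ValueError):
        return None
    return node if node >= 0 else None  # the kernel reports -1 when unknown


def numa_aligned(nic_node, cpu_node):
    """True when the NIC and the pinned CPUs share a NUMA node."""
    return nic_node is not None and nic_node == cpu_node


if __name__ == "__main__":
    print(parse_hugepages(Path("/proc/meminfo").read_text()))
    # "ens1f0" and node 0 are assumed values for illustration.
    print(numa_aligned(nic_numa_node("ens1f0"), cpu_node=0))
```

A result of `False` from `numa_aligned` suggests that packets would cross the inter-socket link on their way to the pinned CPUs, which is the situation NUMA-aware placement is meant to avoid.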

To effectively integrate high-performance networking in modern infrastructures, it's essential to understand how each technology behaves in both VM-based and Kubernetes pod-based environments. While the core concepts remain the same, their implementation and impact can vary significantly depending on the workload type. The table below compares the typical usage and behavior of OVS, OVS-DPDK, and SR-IOV in both VMs and Pods.

| Technology | VM Networking | Pod Networking |
| --- | --- | --- |
| OVS | VM connects via a virtual NIC to a Linux bridge or OVS | Pods connect via default CNI bridge or OVS bridge |
| OVS-DPDK | VM uses vhost-user to connect to OVS-DPDK for high-speed switching | Pods can use DPDK-enabled CNIs (e.g., with Multus) to bind to OVS-DPDK |
| SR-IOV | VF (Virtual Function) assigned directly to VM NIC | VF assigned directly to pod via SR-IOV CNI plugin |

Container Network Interfaces (CNIs) are essential for providing network connectivity to pods in Kubernetes. While most common CNIs focus on simplicity and compatibility, high-performance CNIs are designed to support technologies like SR-IOV and OVS-DPDK, enabling low-latency and high-throughput networking for demanding workloads. The table below compares standard CNIs with high-performance CNIs based on their capabilities and use cases.

| CNI Plugin | Type | Supports SR-IOV | Supports OVS-DPDK | Typical Use Case |
| --- | --- | --- | --- | --- |
| Calico | Standard CNI | ❌ | ❌ | Policy enforcement, scalable networking |
| Flannel | Standard CNI | ❌ | ❌ | Simple overlay networking |
| Cilium | Standard CNI (eBPF) | ❌ (native) | ❌ | Security and observability with eBPF |
| Multus | Meta CNI | ✅ (via delegate) | ✅ (via delegate) | Multi-NIC, chaining high-performance CNIs |
| SR-IOV CNI | High-Performance | ✅ | ❌ | Direct NIC access for low-latency pods |
| OVS-DPDK CNI | High-Performance | ❌ | ✅ | Ultra-low-latency DPDK-based networking |

Multus CNI is a meta-plugin that enables Kubernetes pods to attach multiple network interfaces by delegating each additional interface to another CNI plugin. It is essential for integrating high-performance networking technologies like SR-IOV and OVS-DPDK. SR-IOV allows direct access to hardware NICs for near-native speed and low latency, while OVS-DPDK leverages user-space packet processing for ultra-fast data plane performance. Together, they provide the flexibility and speed required for demanding workloads in cloud-native environments.
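In practice, each secondary network is declared to Multus as a `NetworkAttachmentDefinition` object that wraps the configuration of the delegate CNI. The sketch below builds such a manifest for an SR-IOV network and prints it as JSON, which `kubectl apply -f` accepts. The network name, the device-plugin resource name `intel.com/intel_sriov_netdevice`, and the subnet are assumptions chosen for illustration and need to match the actual cluster setup.

```python
import json


def sriov_network_attachment(name, resource_name, subnet):
    """Build a NetworkAttachmentDefinition manifest for an SR-IOV network."""
    cni_config = {
        "cniVersion": "0.3.1",
        "name": name,
        "type": "sriov",  # delegate CNI plugin invoked by Multus
        "ipam": {"type": "host-local", "subnet": subnet},
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {
            "name": name,
            # Links this network to the VF pool advertised by the SR-IOV device plugin.
            "annotations": {"k8s.v1.cni.cncf.io/resourceName": resource_name},
        },
        # Multus expects the delegate CNI configuration as a JSON string.
        "spec": {"config": json.dumps(cni_config)},
    }


if __name__ == "__main__":
    manifest = sriov_network_attachment(
        name="sriov-net",  # hypothetical network name
        resource_name="intel.com/intel_sriov_netdevice",  # assumed resource name
        subnet="10.56.217.0/24",  # assumed data-plane subnet
    )
    print(json.dumps(manifest, indent=2))
```

Generating the manifest from code rather than writing it by hand is only a convenience here; the same object can be written directly as YAML.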

The SR-IOV (Single Root I/O Virtualization) CNI plugin allows Kubernetes pods to directly use Virtual Functions (VFs) from a physical NIC. This provides near-native performance by bypassing the host's software network stack. It’s ideal for low-latency and high-throughput workloads, such as telecom, finance, or real-time apps.
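Before pods can consume VFs, the physical NIC on the node has to expose them. On Linux this can be checked through sysfs, where SR-IOV capable devices publish `sriov_numvfs` (VFs currently configured) and `sriov_totalvfs` (the maximum the device supports). The following is a small sketch of that check; the interface name `ens1f0` is a placeholder.

```python
from pathlib import Path


def sriov_vf_counts(iface, sysfs_root="/sys/class/net"):
    """Return (configured VFs, maximum VFs) for a NIC, or None if SR-IOV is not exposed."""
    device = Path(sysfs_root) / iface / "device"
    try:
        numvfs = int((device / "sriov_numvfs").read_text().strip())
        totalvfs = int((device / "sriov_totalvfs").read_text().strip())
    except (OSError, ValueError):
        return None
    return numvfs, totalvfs


if __name__ == "__main__":
    counts = sriov_vf_counts("ens1f0")  # assumed interface name
    if counts is None:
        print("SR-IOV is not available on this interface")
    else:
        print(f"{counts[0]} of {counts[1]} VFs configured")
```

A configured count of zero on a capable NIC usually means the VFs still need to be created on the host before the SR-IOV CNI plugin has anything to hand out.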

The OVS-DPDK CNI plugin connects pods to a DPDK-accelerated Open vSwitch, enabling user-space packet processing for ultra-fast switching and routing. It reduces kernel overhead and is used in NFV, edge computing, and performance-sensitive Kubernetes environments.

The Problem with Single-Network Clusters

In traditional Kubernetes setups using a single network interface, all types of traffic are multiplexed through the same network interface and bridge, including:

- Pod-to-pod communication

- Ingress/egress traffic

- Management/control plane operations

- Data plane traffic (e.g., user packets, telemetry, media)

This becomes a performance bottleneck, especially when:

- You're handling high packets per second (PPS).

- Your workloads are latency-sensitive (e.g., VoIP, real-time analytics, NFV).

- You need strict traffic isolation (e.g., data plane vs control plane).
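To put "high PPS" in perspective, it helps to work out how many packets a link can carry at line rate and how little time that leaves per packet. The sketch below does the arithmetic for Ethernet, where every frame also occupies 20 bytes on the wire for the preamble, start-of-frame delimiter, and inter-frame gap. The 10 Gbit/s link speed and the frame sizes are illustrative inputs.

```python
ETHERNET_OVERHEAD_BYTES = 20  # preamble (7) + start-of-frame delimiter (1) + inter-frame gap (12)


def line_rate_pps(link_bps, frame_bytes):
    """Maximum packets per second a link can carry for a given frame size."""
    return link_bps / ((frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8)


def per_packet_budget_ns(link_bps, frame_bytes):
    """Time available to process each packet at line rate, in nanoseconds."""
    return 1e9 / line_rate_pps(link_bps, frame_bytes)


if __name__ == "__main__":
    for frame in (64, 512, 1518):
        pps = line_rate_pps(10e9, frame)
        budget = per_packet_budget_ns(10e9, frame)
        print(f"{frame:>5} B frames: {pps / 1e6:6.2f} Mpps, {budget:8.1f} ns per packet")
```

For minimum-size 64-byte frames on a 10 Gbit/s link, this works out to roughly 14.88 million packets per second, or about 67 ns per packet. A budget that small leaves little room for a shared kernel bridge that is also carrying control-plane traffic.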

Why Multus Helps

With Multus, you can attach dedicated interfaces for specific traffic types, such as:

- A default CNI for Kubernetes control traffic.

- An SR-IOV interface for high-performance data traffic.

- A DPDK-enabled interface for fast packet processing.

This allows:

- Traffic segregation

- Better QoS

- Reduced contention

- Improved reliability and observability

Real-World Example:

A telecom workload might use:

- eth0 (via Calico) → for Kubernetes API, DNS, etc.

- eth1 (via SR-IOV) → for data sessions with near-zero latency
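A pod requests this kind of second interface through the Multus `k8s.v1.cni.cncf.io/networks` annotation. The sketch below builds such a pod manifest. It assumes a NetworkAttachmentDefinition named `sriov-net` already exists and that VFs are advertised under the resource name `intel.com/intel_sriov_netdevice`; the pod name and container image are hypothetical. Multus names secondary interfaces `net1`, `net2`, and so on by default, so the annotation sets `interface` explicitly to get `eth1`.

```python
import json


def secondary_interface_pod(name, image, network, interface, resource_name):
    """Build a Pod manifest that asks Multus for one extra SR-IOV interface."""
    networks = [{"name": network, "interface": interface}]
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            # eth0 still comes from the cluster's default CNI (e.g., Calico).
            "annotations": {"k8s.v1.cni.cncf.io/networks": json.dumps(networks)},
        },
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # One VF is requested from the SR-IOV device plugin.
                    "resources": {
                        "requests": {resource_name: "1"},
                        "limits": {resource_name: "1"},
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    pod = secondary_interface_pod(
        name="telecom-workload",  # hypothetical pod name
        image="registry.example.com/telecom-workload:latest",  # hypothetical image
        network="sriov-net",  # assumed NetworkAttachmentDefinition
        interface="eth1",
        resource_name="intel.com/intel_sriov_netdevice",  # assumed resource name
    )
    print(json.dumps(pod, indent=2))
```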

Without Multus:

You're pushing everything through eth0, risking:

- Packet drops

- Increased latency

- Control plane congestion

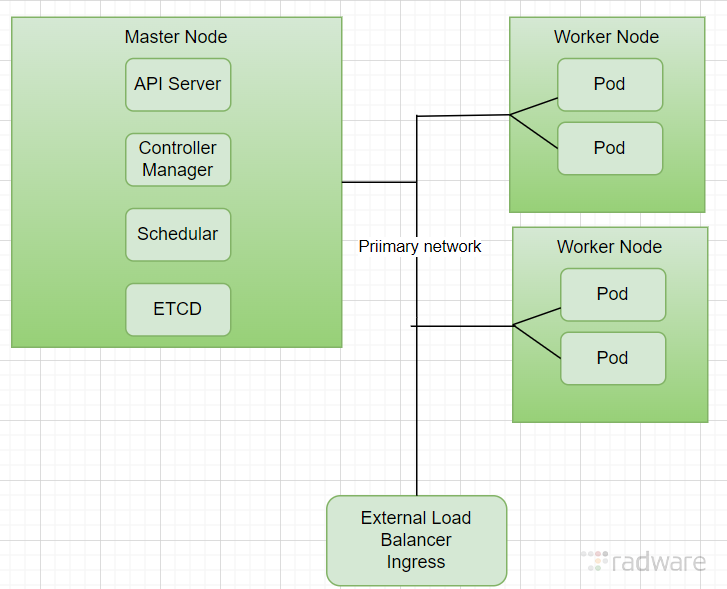

Typical Cluster without Multus CNI:

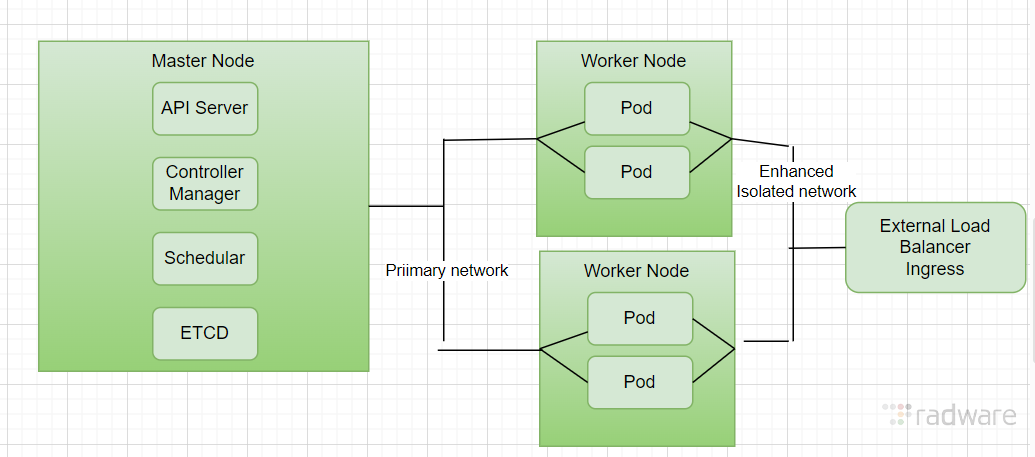

Cluster with Multus CNI:

The Alteon Kubernetes Connector

When running an application inside a Kubernetes cluster, you need to provide a way for external users to reach it.

An ingress controller routes traffic from outside the cluster to your services, typically through a load balancer. It simplifies network traffic management for the cluster by directing each incoming request to the service that handles it.

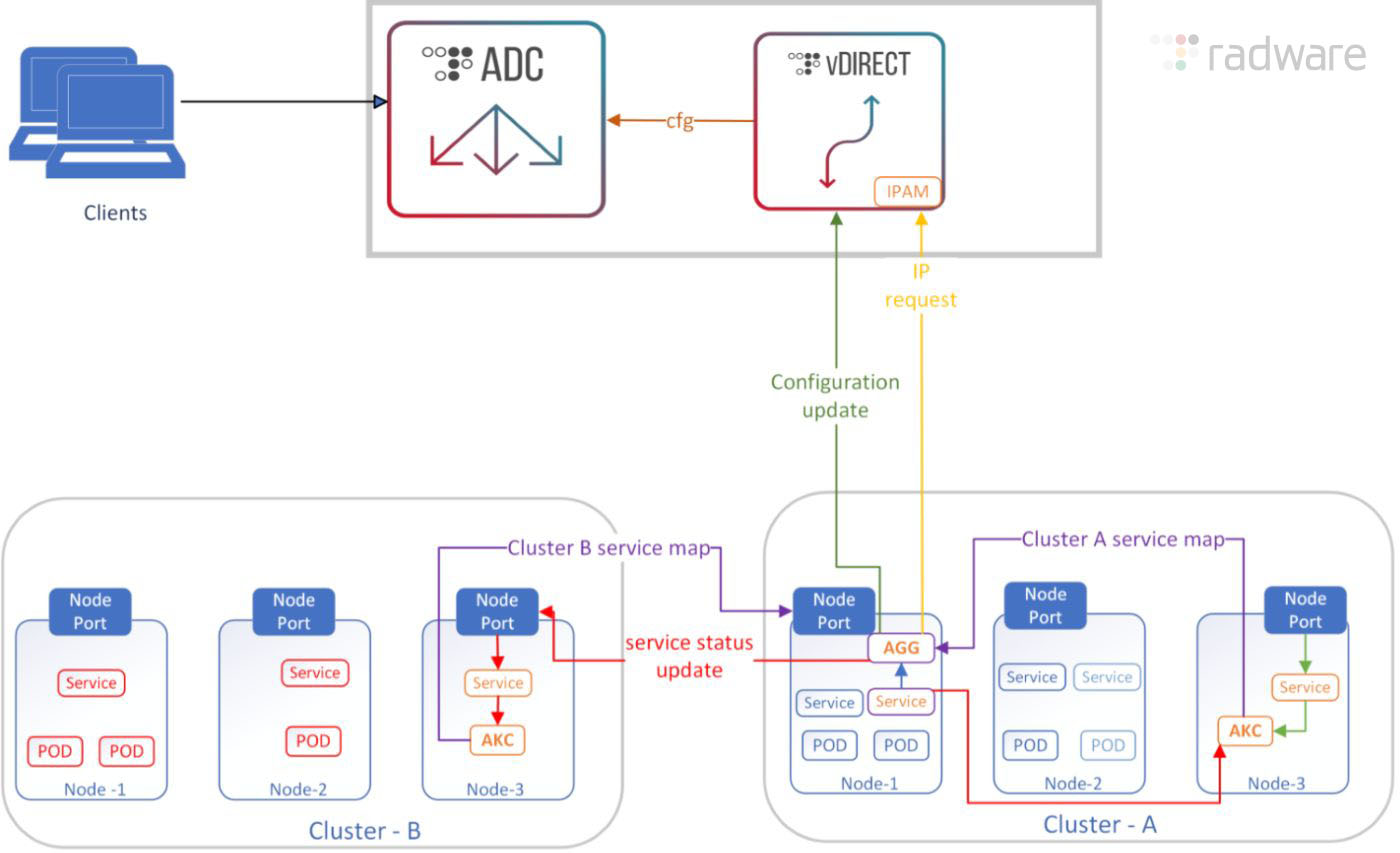

The Alteon Kubernetes Connector (AKC) is a solution that integrates Alteon ADC with Kubernetes/OpenShift orchestration, allowing you to automatically load balance traffic for your Kubernetes workloads.

The Alteon Kubernetes Connector (AKC) discovers services in the Kubernetes cluster and translates them to Alteon ADC configuration. It also looks for the addition or removal of nodes/pods from the Kubernetes clusters and communicates the changes to Alteon to keep the Alteon configuration in sync.

Using AKC's ability to discover and aggregate services that run in multiple clusters, Alteon can provide load balancing and high availability between clusters.

The AKC has three components:

- The AKC Controller - discovers the service objects in the Kubernetes clusters. Such a component is required in each of the participating Kubernetes clusters.

- The AKC Aggregator - aggregates inputs from all the controllers and communicates the necessary configuration changes to the AKC Configurator.

- The AKC Configurator - prepares the Alteon configuration file and pushes it to the Alteon device.

In addition, the solution uses the vDirect module in Cyber Controller (or APSolute Vision), which provides the IPAM service required to allocate a service IP (VIP) from an IP pool (currently, a single pool is supported).
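The flow between these components can be pictured with a toy model. The sketch below is purely illustrative and is not AKC code, its data model, or its API: every class, function, and field name is hypothetical. It only mirrors the roles described above, with per-cluster discovery reports, an aggregation step, a single VIP pool, and a rendered configuration.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    """Hypothetical record a per-cluster controller might report."""
    cluster: str
    name: str
    endpoints: tuple  # addresses backing the service in that cluster


def aggregate(controller_reports):
    """Aggregator role: merge per-cluster reports into one endpoint list per service."""
    merged = {}
    for report in controller_reports:
        for service in report:
            merged.setdefault(service.name, []).extend(service.endpoints)
    return merged


class VipPool:
    """IPAM role: a single pool that hands out one VIP per service, in order."""

    def __init__(self, cidr):
        self._free = iter(ipaddress.ip_network(cidr).hosts())
        self._assigned = {}

    def allocate(self, service_name):
        if service_name not in self._assigned:
            self._assigned[service_name] = str(next(self._free))
        return self._assigned[service_name]


def render_config(merged, pool):
    """Configurator role: turn aggregated services into a load-balancer view."""
    return {
        name: {"vip": pool.allocate(name), "servers": sorted(endpoints)}
        for name, endpoints in merged.items()
    }


if __name__ == "__main__":
    reports = [
        [Service("cluster-a", "web", ("10.0.0.11", "10.0.0.12"))],
        [Service("cluster-b", "web", ("10.1.0.21",))],
    ]
    print(render_config(aggregate(reports), VipPool("192.0.2.0/29")))
```

The point of the model is the shape of the pipeline: a service that exists in two clusters ends up behind one VIP with endpoints from both, which is what makes load balancing across clusters possible.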

AKC Deployment Architecture

Key Components for Enhancing Networking Performance:

Virtual Machines:

Alteon ADC and the Cyber Controller run as virtual machines, allowing their network interfaces to be upgraded to high-performance options such as SR-IOV or OVS-DPDK.

Pod Connectivity within the Kubernetes Cluster:

To enable advanced networking, the cluster transitions from a traditional single-CNI setup to Multus CNI, which supports multiple interfaces per pod and delegates the additional interfaces to the SR-IOV CNI or OVS-DPDK CNI. This allows both AKC pods and customer application pods to connect using enhanced networking technologies like SR-IOV and OVS-DPDK.

This blog explores how to optimize ingress networking in Kubernetes for high-performance applications using technologies like OVS, OVS-DPDK, and SR-IOV. Based on real-world experience at Radware, it outlines the limitations of traditional CNI-based networking for latency-sensitive workloads and describes how to redesign both the ingress controller (AKC) and application pods to support enhanced networking.

The blog compares networking techniques across VMs and Kubernetes pods, highlights the role of Multus CNI in enabling multiple high-performance interfaces, and presents key trade-offs between flexibility, performance, and complexity. Tables and diagrams help visualize how each technology fits into modern cloud-native architectures, guiding readers in building scalable, high-throughput ingress solutions for demanding environments.

Relying on a single network interface in a Kubernetes cluster can quickly become a bottleneck in high-performance environments. Multus CNI addresses this by enabling dedicated interfaces for data traffic, ensuring that performance-critical workloads are not hindered by control-plane congestion or CNI limitations.