With the information security industry suitably distracted by the attacks against Sony, a notable story slipped quietly under the radar last week. On December 22, the New York Times reported that this summer’s breach at JP Morgan Chase was the result of a single server that was overlooked in a system-wide security upgrade. Such an oversight is not uncommon for vast enterprises managing tens of thousands of assets as part of their corporate network. Unfortunately, this instance provided a point of entry for what at the time looked like a contender for the “cyber-attack of 2014,” a title now firmly in the grip of the Sony incident. Still, the JP Morgan incident is important, not only because of its scope but also because of the underlying risks it highlights.

The New York Times story includes a detailed description of the exploit according to “people who have been briefed on internal and external investigations into the attack.” Naturally, the breach wasn’t as simple as an intrusion event on a single server. The origin of the compromise was the theft of legitimate login credentials from a JP Morgan employee, which in and of itself could have been managed had the overlooked server been upgraded with two-factor authentication along with the rest of the corporate network.

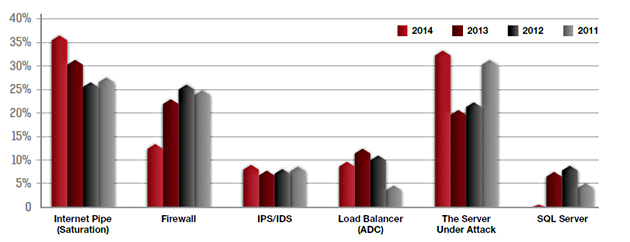

The attackers’ apparent tenacity in seeking out and exploiting this server should come as no surprise. Testing a wide array of network-facing assets until finding the weak link is a clear trend, as evidenced in our 2014-2015 Global Application & Network Security Report published last month. Consider the graphic below, which shows how a number of different technologies and layers of the network become frequent targets, with attackers often jumping from asset to asset until they find one they can knock over.

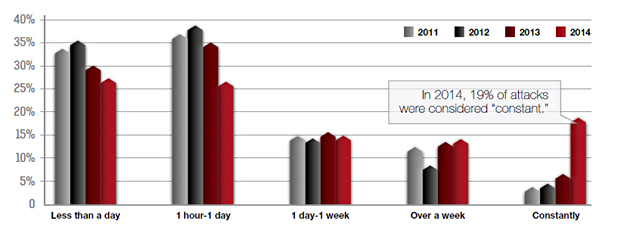

Further evidence of attacker tenacity can be seen in the growing duration of attacks. Based on our survey results, 19% of attacks are now “constant,” a more than 300% increase from previous years. Troubling too is the fact that nearly half (48%) of attacks last more than one day, yet only 52% of respondents reported the ability to defend against attacks for more than one day.

Another trend evident in this year’s report is the continued migration of enterprise IT into the cloud. While the JP Morgan incident wasn’t reported to be related to the use of cloud services, exploits like this can reasonably be expected to increase as more IT assets and sensitive data leave the corporate network.

Much of the adoption in the enterprise market will undoubtedly focus on hybrid cloud deployments, which pair dedicated (single-tenant) cloud-based systems with public Internet elements and assets. Such deployments do have clear security advantages over pure public cloud deployments that rely on multi-tenancy. But in the end they still place security in the hands of a service provider. Considering the situation at JP Morgan, where a large, sophisticated IT and security operation overlooked the security of a network-facing asset, one shudders to think of the potential loss of control.

Organizations adopting cloud computing and “as-a-service” models need to be diligent about understanding the security capabilities of their vendors. Enterprises also need to consider the potential for becoming the virtual “collateral damage” of an attack targeting other customers of the service providers they use.

Radware works with a number of cloud service providers to help them manage secure environments and offer their customers added layers of security for their hosted applications. It is important to explore not just the security services a hosting provider advertises, but also the underlying technology it uses to deliver that security.

What are your company’s plans for adoption of private and hybrid cloud services and how are you adapting your security policies to account for the loss of direct control?