Rate limiting is a technique used to control the rate at which requests are made to a service by a client or an application. It is a strategy for managing and regulating traffic flow to ensure that the service remains available and responsive. This is achieved by placing restrictions on the number of requests that can be made over a specified period of time.

A useful tool for preventing resource abuse and ensuring fair use of services, rate limiting can be used to control traffic flow to web servers, APIs, or other online services. By setting a limit on the number of requests that can be made within a specific time frame, it can prevent the overloading of servers and reduce the risk of downtime or slow response times.

Rate limiting is particularly important in preventing DDoS attacks, which involve sending a flood of requests to a server or service to overwhelm its capacity and cause it to crash. It can also be used to block or delay requests from any single IP address or client that exceeds a specified threshold, making it more difficult for attackers to overwhelm the server or service.

In summary, rate limiting is a method of controlling traffic flow to a service or server by restricting the number of requests that can be made within a certain time frame. It is an essential technique for preventing resource abuse, ensuring fair use of services and protecting against DDoS attacks.

API Rate Limiting

API rate limiting is used to control the rate at which requests are made to an Application Programming Interface (API) by a client or an application. An API is a set of protocols and tools for building software applications that allows different applications to interact with each other. Rate limiting is an important mechanism for managing API traffic and ensuring that the service remains available and responsive.

This technique works by setting a limit on the number of requests that can be made within a specific time frame. For example, an API provider might set a rate limit of 100 requests per minute for a specific endpoint. Once a client or application reaches this limit, any further requests are blocked or delayed until the next time window begins.

API rate limiting can help prevent overloading of servers, reduce the risk of downtime and ensure that resources are used fairly among all clients or applications. It can also help to protect against DDoS attacks, where attackers attempt to overwhelm an API by sending a flood of requests.

API rate limiting can be implemented using different techniques, such as time-based limits, quotas or tokens, or dynamic algorithms that adjust the rate limit based on the load on the system. Some API providers also offer different rate limits for different endpoints or different levels of service.
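To make the per-endpoint, per-tier and load-based ideas concrete, here is a minimal Python sketch. The tier names, endpoints, limits and the 70% load threshold are all hypothetical values chosen for illustration, not any provider's actual policy.

```python
# Hypothetical per-tier, per-endpoint limits in requests per minute.
# "*" is the tier's default for endpoints without a specific entry.
LIMITS: dict[tuple[str, str], int] = {
    ("free", "/search"): 10,
    ("free", "*"): 60,
    ("pro", "/search"): 100,
    ("pro", "*"): 600,
}


def effective_limit(tier: str, endpoint: str, load: float) -> int:
    """Look up the limit for a tier and endpoint, then scale it down as load rises.

    `load` is an assumed utilization figure from 0.0 (idle) to 1.0 (saturated).
    """
    base = LIMITS.get((tier, endpoint))
    if base is None:
        base = LIMITS[(tier, "*")]  # fall back to the tier's default limit
    # Hypothetical policy: keep the full limit up to 70% load, then shrink it
    # linearly, never going below 10% of the base limit.
    if load <= 0.7:
        return base
    factor = max(0.1, 1.0 - (load - 0.7) / 0.3)
    return max(1, int(base * factor))
```

Under this policy a "pro" client keeps 100 searches per minute on a quiet system, but is cut to 10 per minute when the system is saturated.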

In summary, API rate limiting is a method of controlling traffic flow to an API by setting limits on the number of requests that can be made within a specific time frame. It is an essential technique for managing API traffic, protecting against resource abuse and ensuring fair use of API resources.

Which Attacks Are Prevented by Rate Limiting?

Rate limiting plays a critical role in protecting against a variety of attacks that attempt to overwhelm a system by sending an excessive number of requests. By controlling the rate at which requests are made, rate limiting can prevent resource exhaustion, reduce the risk of downtime and protect against different types of attacks.

Three types of attacks that can be prevented by rate limiting are:

1. DDoS attacks - Distributed Denial of Service (DDoS) attacks involve sending a massive number of requests to a system or service to overwhelm it, causing it to become unresponsive or unavailable. Rate limiting can help to prevent DDoS attacks by blocking or delaying requests from a single IP address or client that exceeds a specified threshold, making it more difficult for attackers to overwhelm the system.

2. Brute force attacks - Brute force attacks involve repeatedly attempting to guess login credentials or other sensitive information by using automated tools to send a large number of requests. Rate limiting can help to prevent brute force attacks by limiting the number of login attempts that can be made within a specific time frame, making it more difficult for attackers to guess valid credentials.

3. API abuse - API abuse involves sending a large number of requests to an API with the intention of extracting large amounts of data or causing resource exhaustion. Rate limiting can help to prevent API abuse by limiting the number of requests that can be made by a single client or application, ensuring that API resources are used fairly among all clients and preventing resource exhaustion.
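The brute force defense in item 2 can be sketched as a per-account counter of failed logins. This is a minimal illustration assuming a hypothetical budget of 5 failures per 15 minutes, counted from the first failure; the class and method names are made up for the example, and production systems may also key on the source IP address.

```python
import time
from typing import Optional

MAX_FAILURES = 5           # assumed: failed logins allowed per account...
WINDOW_SECONDS = 15 * 60   # ...within a 15-minute window


class LoginAttemptLimiter:
    """Counts failed logins per account and refuses attempts once the limit is hit."""

    def __init__(self) -> None:
        # account -> (start of the current window, failures in that window)
        self._failures: dict[str, tuple[float, int]] = {}

    def is_allowed(self, account: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        start, count = self._failures.get(account, (now, 0))
        if now - start >= WINDOW_SECONDS:
            return True  # the previous window has expired
        return count < MAX_FAILURES

    def record_failure(self, account: str, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        start, count = self._failures.get(account, (now, 0))
        if now - start >= WINDOW_SECONDS:
            start, count = now, 0  # start a fresh window
        self._failures[account] = (start, count + 1)

    def record_success(self, account: str) -> None:
        # A successful login clears the account's failure history.
        self._failures.pop(account, None)
```

Because the counter is per account, an attacker cycling through many guesses for one user is stopped after the fifth failure, regardless of how fast the requests arrive.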

Rate limiting is a critical technique for preventing attacks that attempt to overwhelm a system or service by controlling the rate at which requests are made. It can help to protect against DDoS attacks, brute force attacks, API abuse and other types of attacks that rely on excessive traffic or resource consumption.

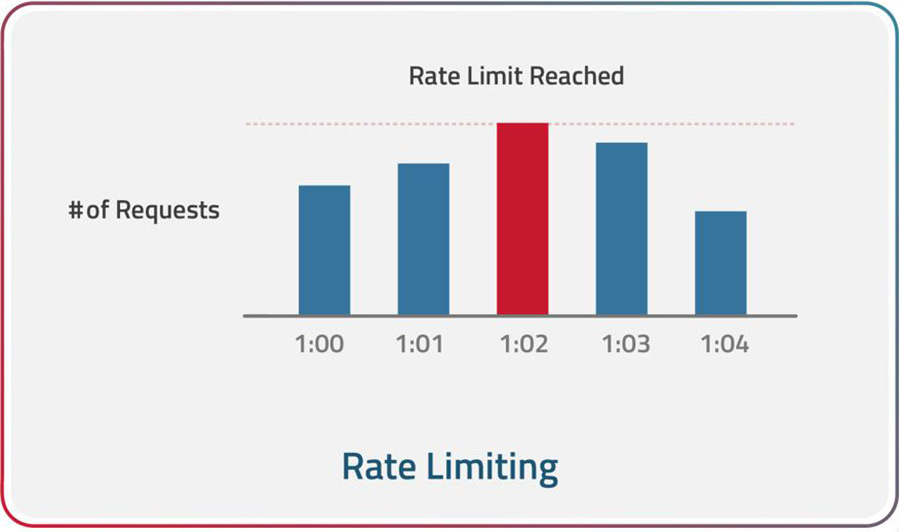

Rate limiting works by controlling the rate at which requests are made to a system or service. It sets a limit on the number of requests that can be made within a specific time frame, typically measured in seconds, minutes, or hours. When the limit is reached, the system or service may either delay or reject further requests until the next time window begins.

Rate limiting can be implemented in several ways, including:

Token bucket algorithm - This algorithm adds tokens to a bucket at a fixed rate, up to a maximum capacity. Each request removes a token from the bucket. If no tokens are available, the request is delayed or rejected.

Leaky bucket algorithm - In this algorithm, incoming requests fill a bucket that drains at a fixed rate, so requests are processed at a steady pace no matter how quickly they arrive. If the bucket overflows, the excess requests are rejected.

Rolling window algorithm - This algorithm counts the requests made within a sliding time window, such as the last 60 seconds. Instead of resetting at fixed boundaries, the window moves forward continuously with the current time, and requests that would exceed the limit within it are blocked or delayed.

Fixed window algorithm - This algorithm involves counting the number of requests made within a fixed time window and blocking or delaying requests that exceed the limit.
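A rolling (sliding) window can be implemented by keeping a log of recent request timestamps. The Python sketch below shows one common way to do it, assuming a single client and an in-memory log; the class name and parameters are illustrative.

```python
import time
from collections import deque
from typing import Optional


class SlidingWindowLog:
    """Allow at most `limit` requests in any `window` seconds, measured back from now."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._timestamps: deque[float] = deque()

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Drop timestamps that have slid out of the window.
        while self._timestamps and self._timestamps[0] <= now - self.window:
            self._timestamps.popleft()
        if len(self._timestamps) < self.limit:
            self._timestamps.append(now)
            return True
        return False
```

Storing one timestamp per request makes memory grow with the limit, which is why high-volume systems often approximate the rolling window by weighting the counts of the current and previous fixed windows.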

Implementing rate limiting correctly can be challenging, as it requires balancing the need for availability and responsiveness with the need to prevent resource abuse and protect against attacks. Rate limiting needs to be carefully tuned to ensure that it does not block legitimate traffic, under-protect against attacks or cause other harm to your business.

Furthermore, rate limiting needs to be adapted to the specific requirements and characteristics of the system or service being protected. For example, a service with high peak loads may require different rate-limiting strategies than a service with consistent, low-level traffic.

There are several different types of rate limiting, each with its own advantages and limitations. The three main types of rate limiting are IP-based, server-based and geography-based.

1. IP-based rate limiting: IP-based rate limiting works by setting limits on the number of requests that can be made by a single IP address within a specific time frame. This type of rate limiting is commonly used to prevent DDoS attacks, as it can block or delay requests from a single IP address that exceed a specified threshold.

- Advantages: IP-based rate limiting is easy to implement and can be effective in preventing attacks from a single IP address. It can also be used to limit the amount of traffic that comes from a specific IP address to prevent resource exhaustion.

- Limitations: IP-based rate limiting can be circumvented by attackers who use multiple IP addresses or dynamic IP addresses. It can also block legitimate traffic from a shared IP address, such as one used by everyone on a company or university network.

- Example scenario: An online retailer implements IP-based rate limiting to prevent bots from scraping its website and stealing its product data. The rate limit is set to 10 requests per minute per IP address to prevent abusive behavior, while still allowing legitimate traffic to access the site.

2. Server-based rate limiting: Server-based rate limiting works by setting limits on the number of requests that can be made to a specific server within a specific time frame. This type of rate limiting is commonly used to prevent resource exhaustion and ensure fair use of server resources.

- Advantages: Server-based rate limiting is effective in preventing resource exhaustion and ensuring fair use of server resources. It can also be used to manage peak loads and ensure that the server remains responsive during times of high traffic.

- Limitations: Server-based rate limiting can be circumvented by attackers who distribute their requests across multiple servers. It can also block legitimate traffic if the limit is set too low or if the server is overloaded.

- Example scenario: A music streaming service implements server-based rate limiting to prevent its API from being overloaded with requests during peak times. The rate limit is set to 100 requests per second per server to ensure fair use of server resources while maintaining responsiveness.

3. Geography-based rate limiting: Geography-based rate limiting works by setting limits on the number of requests that can be made from a specific geographical region within a specific time frame. This type of rate limiting is commonly used to prevent attacks from specific regions, such as those where a large number of malicious requests originate.

- Advantages: Geography-based rate limiting can be effective in preventing attacks from specific regions and can help to reduce the risk of resource exhaustion. It can also be used to ensure compliance with legal and regulatory requirements in specific regions.

- Limitations: Geography-based rate limiting can be circumvented by attackers who use proxy servers or VPNs to hide their location. It can also block legitimate traffic from the affected region, such as requests from users who travel frequently or who connect through virtual machines hosted there.

- Example scenario: A social media platform implements geography-based rate limiting to prevent spam and abusive behavior from a specific region known for high levels of malicious activity. The rate limit is set to 10 requests per minute per IP address originating from that region to prevent abusive behavior while still allowing legitimate traffic to access the platform.
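The three types above differ mainly in what each request is counted against: its source IP, the server it reaches, or its source IP when it comes from a flagged region. The sketch below illustrates that by pairing one simple fixed-window counter with different key functions, using the limits from the example scenarios. It assumes the country code has already been resolved upstream (for example, by a GeoIP lookup); the `Request` type, the server name and the region code `XX` are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Request:
    client_ip: str
    server: str
    country: str  # assumed to be filled in by an upstream GeoIP lookup


class KeyedRateLimiter:
    """Fixed-window counter whose bucket is chosen by a key function."""

    def __init__(self, key_fn: Callable[[Request], Optional[str]],
                 limit: int, window: float) -> None:
        self.key_fn = key_fn
        self.limit = limit
        self.window = window
        # (key, window index) -> count; old windows are never evicted in this sketch.
        self._counts: dict[tuple[str, int], int] = {}

    def allow(self, req: Request, now: Optional[float] = None) -> bool:
        key = self.key_fn(req)
        if key is None:
            return True  # this limiter does not apply to the request
        now = time.time() if now is None else now
        bucket = (key, int(now // self.window))
        count = self._counts.get(bucket, 0)
        if count >= self.limit:
            return False
        self._counts[bucket] = count + 1
        return True


FLAGGED_REGIONS = {"XX"}  # hypothetical region code

# IP-based: 10 requests per minute per IP address.
per_ip = KeyedRateLimiter(lambda r: r.client_ip, limit=10, window=60)
# Server-based: 100 requests per second per server.
per_server = KeyedRateLimiter(lambda r: r.server, limit=100, window=1)
# Geography-based: 10 requests per minute per IP, only for flagged regions.
per_ip_in_region = KeyedRateLimiter(
    lambda r: r.client_ip if r.country in FLAGGED_REGIONS else None,
    limit=10, window=60)
```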

There are several algorithms used in rate limiting, each with its own advantages and limitations. Here are three examples:

1. Token Bucket Algorithm

The Token Bucket Algorithm works by imagining a bucket that fills with tokens at a constant rate, up to a fixed maximum capacity. Each request takes a certain number of tokens, and a request is allowed only if the bucket holds enough tokens. Because tokens accumulate while traffic is light, the bucket permits short bursts up to its capacity while holding the long-run rate to the refill rate.

Example: A web server implements the Token Bucket Algorithm to limit requests from a single IP address. The bucket holds at most 50 tokens and is refilled at 10 tokens per minute, and each request takes one token. A client can therefore send a burst of up to 50 requests, but its sustained rate is capped at about 10 requests per minute. If an IP address tries to make a request when there are no tokens in the bucket, the request is blocked.
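A minimal token bucket in Python, reading the 50 in the web server example as the bucket's capacity and refilling it at 10 tokens per minute (an assumption about the intended parameters). Tokens are added lazily from the elapsed time on each call rather than by a background timer, which is a common implementation choice.

```python
import time
from typing import Optional


class TokenBucket:
    """Holds up to `capacity` tokens, refilled at `refill_rate` tokens per second."""

    def __init__(self, capacity: float, refill_rate: float,
                 now: Optional[float] = None) -> None:
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.tokens = capacity  # start full, so an initial burst is allowed
        self.last = time.monotonic() if now is None else now

    def allow(self, cost: float = 1.0, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Add the tokens earned since the last call, never exceeding capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Per-IP bucket for the example: burst of up to 50, refilled at 10 tokens per minute.
bucket = TokenBucket(capacity=50, refill_rate=10 / 60)
```

In a real server there would be one bucket per IP address, typically kept in a dictionary or an external store such as a cache.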

2. Leaky Bucket Algorithm

The Leaky Bucket Algorithm works by imagining a bucket that has a hole in the bottom. Each request adds to the bucket, and the bucket leaks (empties) at a constant rate. Requests are allowed as long as the bucket is not full; once it fills up, excess requests overflow and are discarded.

Example: An email service implements the Leaky Bucket Algorithm to limit the number of emails sent by a single user to 10 per hour. The bucket can hold up to 10 emails, and each new email adds one to the bucket. If the bucket is full, excess emails are discarded. The bucket drains at a rate of one email every six minutes, which matches the limit of 10 per hour.
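The email example can be sketched as a leaky bucket used as a meter: each email raises the bucket's level by one, the level drains continuously at one unit per 360 seconds, and an email that would overflow the 10-unit capacity is discarded. This Python sketch is illustrative; another common variant keeps the bucket as a queue and forwards queued requests at the leak rate, which delays traffic instead of only admitting or discarding it.

```python
import time
from typing import Optional


class LeakyBucket:
    """Leaky bucket as a meter: each request adds 1 unit; the level drains at `leak_rate` units/second."""

    def __init__(self, capacity: int, leak_rate: float) -> None:
        self.capacity = capacity
        self.leak_rate = leak_rate
        self.level = 0.0
        self.last: Optional[float] = None

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        if self.last is not None:
            # Drain whatever has leaked out since the previous request.
            self.level = max(0.0, self.level - (now - self.last) * self.leak_rate)
        self.last = now
        if self.level + 1 <= self.capacity:
            self.level += 1
            return True
        return False  # overflow: the request is discarded


# Per-user bucket for the example: holds 10 emails, drains one every 360 seconds.
emails = LeakyBucket(capacity=10, leak_rate=1 / 360)
```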

3. Fixed Window Counter Algorithm

The Fixed Window Counter Algorithm works by counting the number of requests that occur within a fixed time window, such as a minute or an hour. If the number of requests exceeds a set limit, further requests are blocked until the next time window.

Example: A REST API implements the Fixed Window Counter Algorithm to limit the number of requests per minute to 100. If a client sends more than 100 requests in a minute, further requests are blocked until the next minute starts.
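A minimal fixed window counter for the REST API example (100 requests per minute), assuming windows aligned to multiples of 60 seconds on the clock. The hypothetical `seconds_until_reset` method reports how long a blocked client must wait for the next window.

```python
import math
import time
from typing import Optional


class FixedWindowCounter:
    """Allow at most `limit` requests per window; windows start at multiples of `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.current_window: Optional[int] = None
        self.count = 0

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        window_index = math.floor(now / self.window)
        if window_index != self.current_window:
            # A new window has begun: reset the counter.
            self.current_window = window_index
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False

    def seconds_until_reset(self, now: Optional[float] = None) -> float:
        now = time.time() if now is None else now
        return (math.floor(now / self.window) + 1) * self.window - now


api = FixedWindowCounter(limit=100, window=60)
```

An HTTP API could return that wait in a `Retry-After` header on a `429 Too Many Requests` response. One known weakness of fixed windows is the boundary burst: a client can send 100 requests at the end of one minute and 100 more at the start of the next, so up to 200 requests can arrive within a few seconds.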

Radware's rate-limiting solution is designed to protect against various types of attacks, including DDoS attacks, brute force attacks and web scraping. The solution works by applying different rate limits to various types of traffic, such as HTTP/HTTPS, DNS and SIP, to prevent resource abuse and ensure the availability of critical services.

Radware uses advanced algorithms to dynamically adjust the rate limits based on the current traffic conditions. The solution leverages machine learning and behavioral analysis to detect abnormal traffic patterns and apply the appropriate rate limit to prevent attacks while allowing legitimate traffic to pass.

Radware also provides granular control over the rate limits, allowing administrators to set different limits for different types of traffic and specific IP addresses. The solution can also be configured to generate alerts and notifications when rate limits are exceeded, allowing administrators to take proactive measures to prevent attacks.

Radware combines positive and negative security models to protect against web application attacks, access violations, attacks disguised behind CDNs, API manipulations, advanced HTTP-based assaults, brute force attacks on login pages and more. This combination allows for precise policy definitions, which helps to prevent both false positives and false negatives.

The negative security model relies on regularly updated signatures to detect and block attacks that exploit known application vulnerabilities. In contrast, the positive security model is useful in preventing zero-day attacks. It allows administrators to define the permitted value types and ranges for all client-side inputs, including encoded inputs and structured formats such as XML and JSON. Positive security profiles restrict user input to the minimum required for the application to function properly, which is what makes the model effective against zero-day attacks.

The positive security model is designed to allow only known and trusted traffic to pass through while blocking everything else. This is achieved by defining a set of rules that explicitly permit traffic based on its characteristics, such as the source IP address, destination IP address, port number and protocol type. For example, if a web server is under a DDoS attack, the positive security model can be used to allow only legitimate traffic from known sources to access the server. Requests from IP addresses that are not on the whitelist are then blocked or limited, mitigating the DDoS attack.
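The whitelisting idea can be sketched with Python's standard `ipaddress` module. The trusted networks below are documentation address ranges used as placeholders, and the per-window budget for unknown sources (`unknown_limit`, where 0 means block outright) is a hypothetical parameter; the caller is assumed to clear the counts at the start of each window.

```python
import ipaddress

# Hypothetical whitelist of trusted networks (documentation ranges as placeholders).
WHITELIST = [ipaddress.ip_network(n) for n in ("192.0.2.0/24", "2001:db8::/32")]


def is_whitelisted(client_ip: str) -> bool:
    addr = ipaddress.ip_address(client_ip)
    # `in` is simply False when the address and network are different IP versions.
    return any(addr in net for net in WHITELIST)


def allow(client_ip: str, unknown_counts: dict[str, int], unknown_limit: int = 0) -> bool:
    """Whitelisted sources always pass; unknown sources get at most `unknown_limit` requests."""
    if is_whitelisted(client_ip):
        return True
    used = unknown_counts.get(client_ip, 0)
    if used < unknown_limit:
        unknown_counts[client_ip] = used + 1
        return True
    return False
```

Setting `unknown_limit` to 0 gives the strict "block everything else" behavior, while a small positive value gives the "limited" behavior described above.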

On the other hand, the negative security model is designed to block known and suspected malicious traffic while allowing everything else to pass through. Rate limiting can also be used as a mechanism to control the amount of traffic allowed to pass through based on these rules.

For example, if a web server is being targeted by a specific type of attack, such as SQL injection, the negative security model can be implemented using rate limiting to block or limit requests that match the pattern of the attack. This means that requests that are suspected to be malicious will be blocked or limited, effectively mitigating the attack.
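Combining signatures with rate limiting can be sketched as follows: a request that matches a known attack pattern is blocked, and a client that sends too many matching requests within a window is blocked entirely until those matches age out. The three regular expressions are toy SQL injection signatures for illustration only, nothing like a production rule set, and the class name and thresholds are assumptions.

```python
import re
import time
from typing import Optional

# Toy SQL injection signatures for illustration only.
SQLI_SIGNATURES = [
    re.compile(r"(?i)\bunion\b.+\bselect\b"),
    re.compile(r"(?i)'\s*or\s+'?1'?\s*=\s*'?1"),
    re.compile(r"(?i);\s*drop\s+table\b"),
]


class SignatureRateLimiter:
    """Block requests matching a known-bad pattern; block a client after repeated matches."""

    def __init__(self, max_matches: int, window: float) -> None:
        self.max_matches = max_matches
        self.window = window
        self._matches: dict[str, list[float]] = {}  # client -> match timestamps

    def allow(self, client: str, query: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Keep only the matches that are still inside the window.
        recent = [t for t in self._matches.get(client, []) if t > now - self.window]
        self._matches[client] = recent
        if len(recent) >= self.max_matches:
            return False  # client exceeded its budget of suspicious requests
        if any(sig.search(query) for sig in SQLI_SIGNATURES):
            recent.append(now)
            return False  # the matching request itself is always blocked
        return True
```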