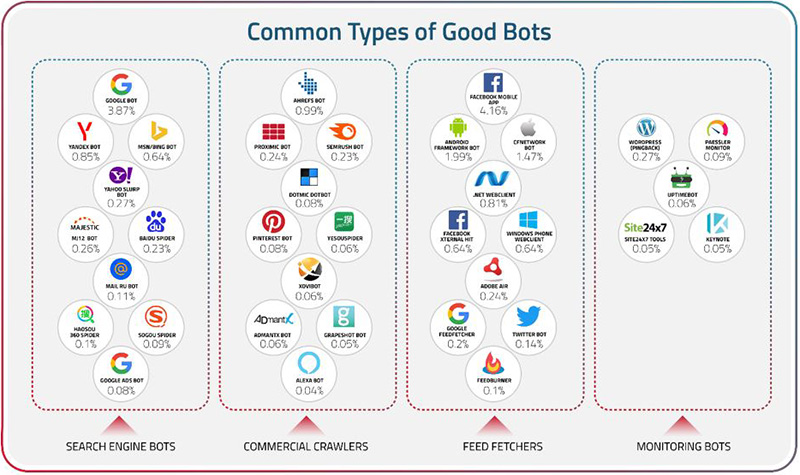

Good bots are automated programs that benefit businesses as well as individuals. When you search for a website, or for phrases related to a website's products or services, relevant results appear on the search results page. This is made possible by search engine spider bots, also known as crawler bots (Googlebot, Bingbot, and Baiduspider, to name a few).

Good bots are generally deployed by reputable companies, and for the most part they respect the rules webmasters set to regulate crawling activity, such as crawl rate and which pages may be visited. These rules are defined in a website's robots.txt file for crawlers to read. A robots.txt file can also ask specific crawlers to stay away entirely when they are not useful to the business. For example, a business that does not operate in China and/or does not cater to the Chinese market can block Baidu's crawler.
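As a minimal sketch of the Baidu example, the Python snippet below uses the standard library's `urllib.robotparser` to evaluate a robots.txt that disallows Baidu's crawler (which identifies itself as `Baiduspider`) while letting other crawlers in. The rules, paths, and `example.com` URLs are illustrative assumptions, and the parser only reports what a well-behaved crawler *should* do; it does not block anything on its own.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a business that does not serve the Chinese market.
ROBOTS_TXT = """\
User-agent: Baiduspider
Disallow: /

User-agent: *
Disallow: /admin/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content supplied as a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for agent in ("Baiduspider", "Googlebot"):
        for url in ("https://example.com/products", "https://example.com/admin/login"):
            # can_fetch answers: may this crawler request this URL?
            print(agent, url, rules.can_fetch(agent, url))
```

Note that `can_fetch` matches on the crawler's product token (such as `Baiduspider`), not on a full `User-Agent` header string.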

Apart from search engine crawlers, good bots also include partner bots (e.g., Slackbot), social network bots (e.g., Facebook Bot), website monitoring bots (e.g., Pingdom), backlink checker bots (e.g., SemrushBot), aggregator bots (e.g., Feedly), and more. Even good bots can cause problems, for example when their traffic grows enough to strain server capacity or when their volume skews analytics.

Search engine crawlers

These bots, also called spiders, crawl web pages so that search engines such as Google and Bing can index them. Website administrators can specify non-binding rules in their robots.txt file for crawlers to follow, such as crawl rate and the pages or sections that should not be crawled. A complication is that malicious bots often impersonate well-known crawlers by copying their user-agent strings. Such spoofed crawlers are hard for webmasters to detect, because they can slip past basic security measures that trust the identity a bot claims.
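One widely documented way to catch spoofed crawlers is forward-confirmed reverse DNS: look up the hostname for the requesting IP, check that it belongs to the crawler operator's domain, then resolve that hostname and confirm it maps back to the same IP. Google, for instance, publishes this procedure for Googlebot. The Python sketch below assumes the caller supplies the operator's domain suffixes (such as `googlebot.com`); confirm the exact suffixes in each operator's own documentation. The function name `is_verified_crawler` is hypothetical, the lookup functions are injectable so the logic can be tested offline, and `socket.gethostbyname_ex` only covers IPv4.

```python
import socket
from typing import Callable, Iterable, List, Tuple

# Same shape as socket.gethostbyaddr / socket.gethostbyname_ex:
# (hostname, aliaslist, ipaddrlist).
Lookup = Callable[[str], Tuple[str, List[str], List[str]]]


def is_verified_crawler(
    ip: str,
    allowed_suffixes: Iterable[str],
    reverse_lookup: Lookup = socket.gethostbyaddr,
    forward_lookup: Lookup = socket.gethostbyname_ex,
) -> bool:
    """Forward-confirmed reverse DNS check for an IP claiming to be a crawler."""
    try:
        hostname, _, _ = reverse_lookup(ip)
    except OSError:  # socket.herror / socket.gaierror are OSError subclasses
        return False  # no PTR record, so the claim cannot be confirmed
    hostname = hostname.lower().rstrip(".")
    suffixes = [s.lower().strip(".") for s in allowed_suffixes]
    if not any(hostname.endswith("." + s) for s in suffixes):
        return False  # PTR record points outside the operator's domains
    try:
        _, _, addresses = forward_lookup(hostname)
    except OSError:
        return False
    # The hostname must resolve back to the original IP; otherwise anyone who
    # controls reverse DNS for their own address block could fake the PTR record.
    return ip in addresses
```

Because each check costs two DNS round trips, a real deployment would likely cache results per IP rather than verifying on every request.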

Chatbots and virtual assistants

Chatbots and virtual assistants are AI-powered programs that simulate human conversation, letting people communicate with computers in a way that feels natural. Many organizations use them to serve customers better: they answer frequently asked questions, point customers to relevant knowledge articles and resources, and help with filling out forms and other routine tasks. When an inquiry is too complex, these automated self-service agents can often hand it off to a live human agent. During periods of uncertainty or emergency, AI-powered customer service can be invaluable to businesses, helping customer service and human resources call centers keep up with spikes in demand while reducing customer wait times and frustration.
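The hand-off described above comes down to a confidence check: answer when a question clearly matches known content, otherwise escalate to a person. The Python sketch below uses simple keyword overlap as a stand-in for the NLP intent classification a real assistant would use; the FAQ entries, the `reply` function, and the `min_matches` threshold are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import FrozenSet, Optional, Sequence


@dataclass(frozen=True)
class FaqEntry:
    keywords: FrozenSet[str]  # words that indicate this question
    answer: str


@dataclass
class BotReply:
    text: str
    escalated: bool  # True when the conversation goes to a live agent


# Hypothetical knowledge base.
FAQS = (
    FaqEntry(frozenset({"reset", "password"}), "You can reset your password from the login page."),
    FaqEntry(frozenset({"opening", "hours"}), "Our support hours are listed on the Contact page."),
)


def reply(message: str, faqs: Sequence[FaqEntry] = FAQS, min_matches: int = 2) -> BotReply:
    words = set(re.findall(r"[a-z']+", message.lower()))
    best: Optional[FaqEntry] = None
    best_score = 0
    for entry in faqs:
        score = len(entry.keywords & words)  # how many trigger words appear
        if score > best_score:
            best, best_score = entry, score
    if best is not None and best_score >= min_matches:
        return BotReply(best.answer, escalated=False)
    # Not confident enough to answer: hand off to a human agent.
    return BotReply("Let me connect you with a member of our team.", escalated=True)
```

Raising `min_matches` makes the bot escalate more often; the trade-off is between answering quickly and risking a wrong answer.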

E-commerce bots

E-commerce bots are automated programs that perform a variety of tasks to help online businesses run more efficiently. Some are designed to compare prices, manage inventory, or make recommendations. Price comparison bots let users search through millions of items, compare prices, set alerts for price drops, and save items to view or purchase later. Inventory management systems, in turn, streamline the tracking and management of products, orders, and stock levels, with features such as centralized inventory management, demand forecasting, multi-warehouse support, batch and expiration tracking, and inventory reorder alerts.
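A price-drop alert like the ones mentioned above is essentially a comparison between the last known price and the newest one. This Python sketch assumes prices arrive as integer cents from some earlier scraping or comparison step; `PriceAlert` and `triggered_alerts` are hypothetical names, not part of any particular product.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PriceAlert:
    user: str
    item_id: str
    target_cents: int  # notify when the price falls to or below this


def triggered_alerts(
    alerts: List[PriceAlert],
    previous: Dict[str, int],
    current: Dict[str, int],
) -> List[PriceAlert]:
    """Return alerts whose item price has just crossed down to the target."""
    fired = []
    for alert in alerts:
        old = previous.get(alert.item_id)
        new = current.get(alert.item_id)
        if new is None:
            continue  # item missing from the latest price check
        # Fire only on the crossing, so users are not re-notified on every check.
        if new <= alert.target_cents and (old is None or old > alert.target_cents):
            fired.append(alert)
    return fired
```

Storing money as integer cents avoids floating-point rounding surprises when comparing prices.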

Monitoring bots

Monitoring bots track the uptime and system health of websites. They periodically check and report on metrics such as page load time and downtime duration. For example, Pingdom is a site monitoring service that helps you keep an eye on your website's uptime. Such bots work by sending requests to a resource at regular intervals and checking whether it responds and behaves as expected.
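The request-and-check loop these bots run can be sketched with Python's standard library. This is a minimal illustration, not a description of Pingdom or any other product: the hypothetical `probe` function records one HTTP check, and `summarize` turns a series of checks into an uptime percentage and average response time. Treating any 2xx or 3xx status as "up" is an assumption; real monitors often also inspect the response content.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CheckResult:
    status: Optional[int]  # HTTP status code, or None if no response arrived
    seconds: float         # wall-clock time spent on the request

    @property
    def up(self) -> bool:
        return self.status is not None and 200 <= self.status < 400


def probe(url: str, timeout: float = 10.0) -> CheckResult:
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code  # the server answered, but with an error status
    except OSError:
        status = None      # DNS failure, refused connection, timeout, etc.
    return CheckResult(status, time.monotonic() - start)


def summarize(results: List[CheckResult]) -> dict:
    if not results:
        raise ValueError("no checks recorded")
    up = [r for r in results if r.up]
    return {
        "uptime_pct": 100.0 * len(up) / len(results),
        # Average only successful checks, so timeouts do not inflate latency.
        "avg_response_s": sum(r.seconds for r in up) / len(up) if up else None,
    }
```

A scheduler (cron, or a loop with `time.sleep`) would call `probe` at a fixed interval and keep the results for `summarize`.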

Data analysis bots

Data analysis bots are used for web scraping, data mining, and sentiment analysis. Web scraping is the automated collection of web data using a web scraping bot or a scraping application programming interface (API). The collected data can then be analyzed with techniques such as sentiment analysis, a natural language processing (NLP) and machine learning (ML) method. Depending on the type performed, sentiment analysis can reveal a text's urgency, emotions, and intentions. It can help businesses safeguard their reputation, monitor their brand and product reviews, and understand their customers' needs.
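To make the analysis step concrete, here is a deliberately simple lexicon-based scorer in Python. It is a swapped-in technique, much cruder than the NLP and ML methods mentioned above: it sums hand-assigned word weights and flips a word's sign when it follows a negator. The word list, weights, and `sentiment` function are hypothetical and only illustrate mapping text to a polarity.

```python
import re
from typing import Dict

# Tiny hypothetical lexicon; real systems use trained models or curated lexicons.
LEXICON: Dict[str, int] = {
    "love": 2, "great": 2, "good": 1,
    "slow": -1, "bad": -2, "broken": -2, "terrible": -3,
}
NEGATORS = {"not", "never", "no"}


def sentiment(text: str) -> str:
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        weight = LEXICON.get(token, 0)
        # Flip polarity when the previous word is a negator ("not good").
        if i > 0 and tokens[i - 1] in NEGATORS:
            weight = -weight
        score += weight
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

Applied to scraped product reviews, labels like these could be aggregated per product to watch for shifts in customer opinion.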

Social media bots

Social media bots are automated accounts that post content or interact with other users without direct human involvement. Some are run by the social networking sites themselves to give their clients' websites visibility and drive engagement on the platform. These programs use artificial intelligence, big data analytics, and other programs or databases to imitate legitimate users posting content. In essence, social bots automate social media interactions to mimic human activity, but at a scale no human user could match.

Social media automation is the process of reducing the manual labor required to manage social media accounts by using automation software. Automating post scheduling, basic customer service, and producing analytics reports can free up hours of time for social media managers to work on higher-level tasks.
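Post scheduling, the first task in that list, is at heart a priority queue ordered by publish time. The Python sketch below uses the standard `heapq` module; `ScheduledPost`, `PostQueue`, and the idea of a worker periodically calling `due()` and handing the results to each platform's publishing API are assumptions for illustration.

```python
import heapq
from dataclasses import dataclass, field
from datetime import datetime
from typing import List


@dataclass(order=True)
class ScheduledPost:
    publish_at: datetime                 # the only field used for ordering
    text: str = field(compare=False)
    channel: str = field(compare=False)


class PostQueue:
    def __init__(self) -> None:
        self._heap: List[ScheduledPost] = []

    def schedule(self, post: ScheduledPost) -> None:
        heapq.heappush(self._heap, post)

    def due(self, now: datetime) -> List[ScheduledPost]:
        """Pop every post whose publish time has arrived, earliest first."""
        released = []
        while self._heap and self._heap[0].publish_at <= now:
            released.append(heapq.heappop(self._heap))
        return released
```

A heap keeps the next post to publish at the front, so each check only inspects the earliest item instead of scanning the whole schedule.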

There are many good bots that perform a variety of functions. Some popular bots of this type include:

- Googlebot: Google's web crawling bot discovers new and updated pages to be added to the Google index.

- Slackbot: Slack's built-in bot can help users with tasks such as setting reminders and answering questions about Slack.

- Watson Assistant: IBM's AI-powered conversational assistant provides recommendations for further training so that it gets better at its job. It can also be integrated with a database service such as Db2 to serve dynamic, user-specific content from a database.

- HubSpot Bot: HubSpot's code-free bot builder lets users create a custom-branded bot and take advantage of 250 out-of-the-box integrations.

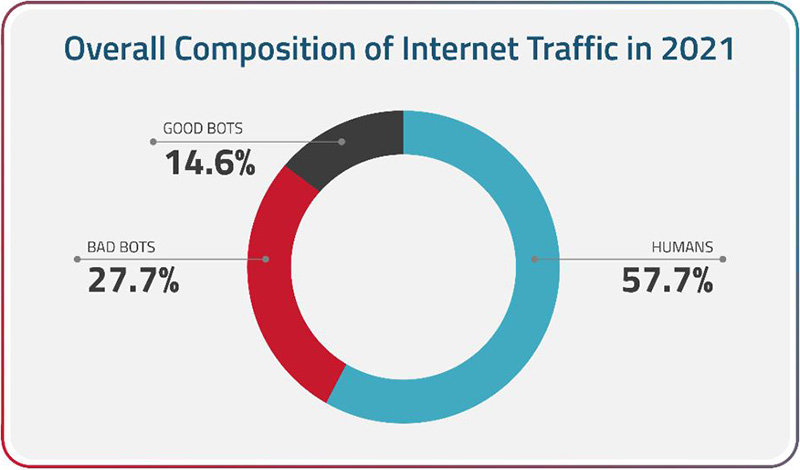

As mentioned above, good bots perform useful tasks that do not harm a user's experience on the internet. Popular examples include search engine bots, copyright bots, site monitoring bots, commercial bots, feed bots, chatbots, and personal assistant bots.

Bad bots, on the other hand, can steal data, break into user accounts, submit junk data through online forms, and perform other malicious activities. They range from credential stuffing bots to scraping bots, spam bots, click fraud bots, and several other types depending on the botmaster’s intentions.

Common ways of handling good bots include:

- Robots.txt – Defined by the Robots Exclusion Protocol, robots.txt is a file located at the root of a website (for example, https://www.radware.com/robots.txt) that lets webmasters set rules for visiting crawlers to obey voluntarily. It is a standard format that websites use to communicate their crawling preferences to web crawlers. Webmasters can specify which sections of a website should not be crawled, such as administrative pages, pages with duplicate content, and internal staging pages. A website without a robots.txt file effectively allows search engine crawlers to crawl every page on the site without restriction. Note, however, that bots can ignore robots.txt: its instructions are non-binding, meant to be followed voluntarily, and the file itself provides no technical means of enforcing them.

- Allowlist (or whitelist) – A list of allowed bots. Good bots may be added to an allowlist so that they are not blocked by a bot management strategy.

- Blocklist (or blacklist) – A list of blocked bots. Bad bots may be added to a blocklist so that they are blocked by a bot management strategy.
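A minimal sketch of how allowlist and blocklist checks might be combined, in Python. The list contents (including the made-up `BadCrawler` and `EvilScraper` names), the `decide` function, and its `"challenge"` outcome are hypothetical. Because a user-agent string is trivially spoofed, the sketch only allows an allowlisted name when a separate verification step (for example, forward-confirmed reverse DNS) has already confirmed the request really comes from that operator.

```python
from typing import Sequence

# Hypothetical lists of user-agent substrings.
ALLOWLIST = ("Googlebot", "Bingbot", "Slackbot")
BLOCKLIST = ("BadCrawler", "EvilScraper")


def decide(
    user_agent: str,
    verified: bool,
    allowlist: Sequence[str] = ALLOWLIST,
    blocklist: Sequence[str] = BLOCKLIST,
) -> str:
    ua = user_agent.lower()
    # Check the blocklist first so a blocked name always wins.
    if any(name.lower() in ua for name in blocklist):
        return "block"
    if any(name.lower() in ua for name in allowlist):
        # The name matches a good bot, but only trust it if its origin checked out.
        return "allow" if verified else "challenge"
    return "default"  # fall through to the site's normal bot-management rules
```

Case-insensitive matching matters here because operators are not consistent about capitalization (Bing's crawler, for example, announces itself as `bingbot`).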

Specialized bot managers

The basic methods above may not meet an organization's specific needs for managing good bots. A specialized solution such as Radware Bot Manager, on the other hand, is designed to let good bots through without hindrance. Depending on the website operator, an exception might be made during periods of very high user traffic, such as Cyber Week sales or other peak traffic periods.
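How a bot manager implements that peak-traffic exception is product-specific. As an illustration only, and not a description of Radware Bot Manager, the Python sketch below lets good bots through unconditionally in normal times and applies a per-bot token bucket (a standard rate-limiting technique) when a `peak` flag is set. The class names, rate, burst size, and how `peak` is detected are all assumptions.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Refills at `rate` tokens per second, holding at most `capacity` tokens."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add the tokens earned since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class GoodBotGate:
    """Admits good bots freely, but rate-limits each one during peak traffic."""

    def __init__(self, peak_rate: float, peak_burst: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.peak_rate = peak_rate
        self.peak_burst = peak_burst
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def admit(self, bot_name: str, peak: bool) -> bool:
        if not peak:
            return True  # normal operation: no hindrance for good bots
        bucket = self.buckets.get(bot_name)
        if bucket is None:
            bucket = TokenBucket(self.peak_rate, self.peak_burst, self.clock)
            self.buckets[bot_name] = bucket
        return bucket.allow()
```

A request the gate rejects would typically be answered with a retry-later status such as HTTP 429 or 503, so the crawler can come back once the peak has passed.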